Arm TechDays 2019

For Cloud and Infrastructure: This is Arm's Neoverse Universe

At this year's Arm TechDays in San Jose, California, Arm's chief architects presented the long-awaited IP components for cloud and infrastructure under the "Neoverse" brand. In addition to two new Neoverse CPUs, the focus was on the system solution approach.

Under the Neoverse brand, Arm intends to offer products ranging from primary CPUs in data centers to edge computers and storage. Until now, the IP portfolio for the infrastructure market has not differed significantly from the consumer IP, e.g. for smartphones. The new, dedicated IP portfolio is called "Arm Neoverse".

Even though success in servers in particular has so far been limited, Arm has gained a significant market share in infrastructure devices overall in recent years. This covers all types of non-end-user devices, such as network devices, switches, base stations, gateways, routers and, of course, servers. Across these markets, Arm's licensees have achieved a combined market share of up to 28% to date. Top-of-rack switches, for example, are included in this figure. Those who build software-defined networks of the current generation generally use x86 CPUs.

As expected, Arm has already brought many partners on board, because without sufficient support from the ecosystem Neoverse would be doomed to failure right from the start. In addition to semiconductor manufacturers such as Qualcomm, Broadcom and Marvell, the partners include Alibaba, Tencent and Microsoft on the cloud side, Nokia, ZTE, Ericsson, Huawei and Cisco on the platform side, and the telecommunications providers Softbank, Vodafone, Orange and Sprint. On the software side, 18 companies have joined, from OS vendors to virtualization, languages and libraries, and development tool vendors. Although some key players are still missing, Arm was able to get some big names on board before the announcement.

The Neoverse N1 CPU

Undoubtedly the most important piece of the puzzle in the new Neoverse universe is a new CPU called N1. In the SpecInt2006 benchmark it achieves a score of 37 per core in its target process, 7 nm from the Taiwanese foundry TSMC, using version 8 of the GCC compiler. A 64-core hyperscale reference design reaches a score of 1310, and does so with a server TDP of only 105 W.

A comparison with Intel's Xeon CPUs, which dominate data centers today, is not easy because SpecInt2006 numbers obtained with GCC compilers are usually not published. What we did find is a figure for the Xeon 8156 from the older 14 nm Skylake generation with 24 cores/48 threads, which also reaches a SpecInt2006 score of 37 per core. The power consumption of the Xeon 8156 is 205 W in a 24-core/48-thread configuration, not counting the additional chipset that Intel requires. It remains to be seen to what extent Intel's future products of the 10 nm generations Cannon Lake and Ice Lake can match the energy efficiency of the Neoverse N1.
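To put the quoted figures side by side, the following sketch divides each TDP by the core count. This is our own back-of-the-envelope simplification, not a calculation presented by Arm: TDP is a design limit rather than measured power, the Xeon figure excludes the chipset, and per-core scores do not scale linearly to a full socket. The dictionary and function names are illustrative.

```python
# Figures as quoted in the article; TDP is a design limit, not measured power.
N1 = {"cores": 64, "tdp_w": 105.0, "specint_per_core": 37.0}
XEON = {"cores": 24, "tdp_w": 205.0, "specint_per_core": 37.0}  # chipset excluded

def watts_per_core(chip):
    """Naive share of the package TDP attributed to a single core."""
    return chip["tdp_w"] / chip["cores"]

def score_per_core_watt(chip):
    """Per-core SpecInt2006 score divided by the per-core TDP share."""
    return chip["specint_per_core"] / watts_per_core(chip)

n1_w = watts_per_core(N1)
xeon_w = watts_per_core(XEON)

print(f"N1:   {n1_w:.2f} W per core, {score_per_core_watt(N1):.1f} points per W")
print(f"Xeon: {xeon_w:.2f} W per core, {score_per_core_watt(XEON):.1f} points per W")
print(f"TDP share per core, Xeon vs. N1: {xeon_w / n1_w:.1f}x")
```

With identical per-core scores, the gap in this rough estimate comes entirely from the TDP share per core.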

The N1 was presented by Mike Filippo, Fellow and Lead Architect at Arm's development center in Austin, Texas. As we have mentioned several times before, the other development centers for Arm CPUs besides Austin are located at Arm's headquarters in Cambridge and in beautiful Sophia Antipolis near Nice, where Texas Instruments designed its OMAP processors 10 years ago. Arm's most recent consumer processor, the Cortex-A76, was designed in Austin, as was the Cortex-A72.

The Neoverse N1 microarchitecture was designed with high performance and energy efficiency in mind and incorporates many elements introduced with the Cortex-A76. However, it has been optimized for infrastructure workloads rather than smartphones. Compared with the Cosmos generation, i.e. the Cortex-A72, the N1 achieves 2.5 times the performance for server workloads with NGINX (a web server under a BSD license that is used by 62.7% of the top 10,000 sites), 1.7 times the performance for Java-based benchmarks (OpenJDK 11, which alone brought a 14% increase over JDK 8), and likewise 2.5 times the performance for the memcached cache server.

The N1 microarchitecture at a glance

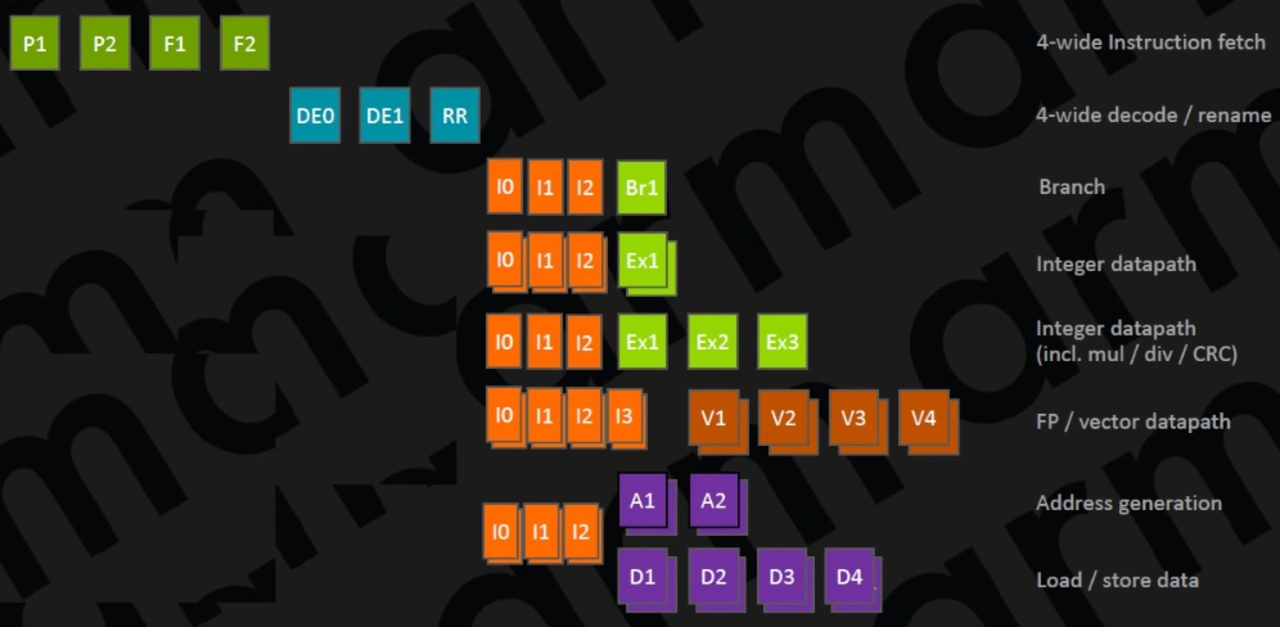

The superscalar out-of-order CPU comes with 4 instruction decoders and 8 execution units and has an integer pipeline with a total of 11 stages (Fig. 1). In the frontend, Arm has built a new unit for branch prediction and instruction fetch called "predict-directed fetch", so named because the branch prediction feeds directly into the instruction fetch unit. This is a new approach that results in higher throughput and lower power consumption.

As in the Cortex-A76, the branch predictor uses a hybrid indirect predictor. The predictor is decoupled from the fetch unit, and its most important structures work independently of the rest of the CPU. It is supported by three levels of branch target buffers (BTBs): a nanoBTB with 16 entries, a microBTB with 64 entries and a main BTB with 6 k entries. The rate of correctly predicted branches in the program flow is said to have increased again compared to the predecessor.
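How a three-level BTB behaves can be illustrated with a toy model. The sketch below is not Arm's implementation: the LRU replacement, the promotion of hits into the faster levels and the reading of "6 k" as 6 × 1024 entries are all assumptions made for illustration, and the class names are hypothetical. Only the three capacities come from the presentation.

```python
from collections import OrderedDict

class BTBLevel:
    """One BTB level, modeled as a small LRU map: branch PC -> target."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.entries = OrderedDict()

    def lookup(self, pc):
        if pc in self.entries:
            self.entries.move_to_end(pc)      # mark as most recently used
            return self.entries[pc]
        return None

    def insert(self, pc, target):
        self.entries[pc] = target
        self.entries.move_to_end(pc)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used

class BTBHierarchy:
    def __init__(self):
        # Capacities from the article; 6 * 1024 for "6 k" is an assumption.
        self.levels = [
            BTBLevel("nanoBTB", 16),
            BTBLevel("microBTB", 64),
            BTBLevel("mainBTB", 6 * 1024),
        ]

    def predict(self, pc):
        """Search from the smallest to the largest level."""
        for i, level in enumerate(self.levels):
            target = level.lookup(pc)
            if target is not None:
                for faster in self.levels[:i]:  # assumed promotion policy
                    faster.insert(pc, target)
                return level.name, target
        return None, None

    def update(self, pc, target):
        """Record a resolved taken branch in every level (assumed policy)."""
        for level in self.levels:
            level.insert(pc, target)

btb = BTBHierarchy()
for i in range(100):                  # 100 distinct branches
    btb.update(0x1000 + 4 * i, 0x1040 + 4 * i)
print(btb.predict(0x1000))            # oldest branch: only the main BTB still holds it
print(btb.predict(0x1000))            # now promoted into the nanoBTB
```

The point of the hierarchy is that hot branches are served from the tiny, fast structures, while the large main BTB catches the long tail of branches in server code.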

The branch unit can process eight 16-bit instructions per clock cycle, which are placed in a fetch queue ahead of the instruction fetch. This queue holds 12 blocks. The fetch unit itself works at only half that throughput, i.e. a maximum of four 16-bit instructions are fetched per clock cycle. Because the branch unit runs ahead of the fetch unit, an incorrectly predicted branch can be hidden from the rest of the pipeline without stalling the fetch unit and the rest of the CPU.

Although the N1 has an 11-stage pipeline, its latency is reduced to that of a 9-stage pipeline. How is this possible? In critical paths, stages can overlap, for example the second clock cycle of the branch prediction and the first cycle of the fetch operation. While nominally 4 pipeline stages have to be passed through, the actual latency is only 3 cycles thanks to the overlap. The decode and register renaming blocks can each process 4 instructions per clock cycle; the decode unit requires 2 clock cycles.

The decoders output so-called macro-ops, of which there are on average 1.07 per original instruction. Register renaming is performed separately for integer, ASIMD and flag operations in separate units, which are disconnected from the clock by clock gating when they are not needed. This leads to enormous energy savings. While decoding on the N1 takes two clock cycles, register renaming has been shortened from 2 clock cycles to 1 compared to, e.g., the Cortex-A73/A75. The macro-ops are expanded into micro-ops at an average ratio of 1.2 per instruction; at the end of the stage, up to 8 micro-ops are dispatched per clock cycle.
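The expansion ratios can be turned into a quick sanity check of the dispatch width. The sketch assumes, as our own simplification, that the decoders sustain their full width of 4 instructions every cycle and that the averages quoted above apply; the constant names are illustrative.

```python
DECODE_WIDTH = 4            # instructions decoded per cycle (from the article)
MACRO_OPS_PER_INSTR = 1.07  # average expansion at the decoder output
MICRO_OPS_PER_INSTR = 1.2   # average expansion after renaming
DISPATCH_WIDTH = 8          # peak micro-ops dispatched per cycle

avg_macro_ops = DECODE_WIDTH * MACRO_OPS_PER_INSTR
avg_micro_ops = DECODE_WIDTH * MICRO_OPS_PER_INSTR
headroom = DISPATCH_WIDTH / avg_micro_ops

print(f"average macro-ops per cycle: {avg_macro_ops:.2f}")
print(f"average micro-ops per cycle: {avg_micro_ops:.2f}")
print(f"dispatch headroom over the average: {headroom:.2f}x")
```

Under these assumptions the dispatch width of 8 sits well above the average demand, so it presumably exists to absorb bursts of instructions that split into several micro-ops.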

The commit buffer of the N1 has 128 entries and is divided into two structures, one for instruction management and one for register recovery, which Arm calls a "hybrid commit system".

The execution units

The integer part contains 6 queues and execution units: two load/store units with decoupled address generation and data path, a branch pipeline, two ALUs capable of performing simple arithmetic operations, and a complex pipeline for multiplication, division and CRC operations. The three integer pipelines and the branch pipeline are served by 16-entry queues, while the two load/store units are fed by two 12-entry queues. All queues comprise 3 pipeline stages, with the first stage overlapping with register renaming or micro-op dispatch. This saves a further clock cycle, so that the total latency is only 9 instead of 11 clock cycles.

The part responsible for floating-point and 128-bit vector operations (ASIMD) contains two pipelines that are served by two queues with 16 entries each. Both vector pipelines can process 128-bit data. Using half-precision floating-point numbers and 8-bit dot products, the N1 improved its score in the DeepBench benchmark (an open-source benchmarking tool that measures the performance of basic operations used in training deep neural networks, such as matrix multiplications) by a factor of 4.7 over the Cortex-A72.

What about instruction throughput and latency in the backend? On the integer side, the N1 reduces multiplication and MAC operations to 2 clock cycles. The much larger and more important improvements can be found in the vector pipelines responsible for floating point and ASIMD. For floating-point arithmetic, the latency is 2 clock cycles, and for MAC instructions 4 clock cycles.

Loading and Storing Data

The cache architecture of the N1 has been optimized for infrastructure workloads that have large data structures and usually many branches in the code. The two pipelines for loading and storing data each have their own queues with 16 entries. The data cache has a fixed size of 64 KB and is 4-way set-associative. Its latency is 4 clock cycles. The I-TLBs/D-TLBs with 48 entries run in a separate pipeline. Like the Cortex-A76, the N1 has a completely redesigned prefetcher with 4 different prefetching engines that run in parallel, look for different access patterns and load data into the caches. With the N1, the 64 KB L1 instruction cache is coherent for the first time in an Arm CPU, which accelerates the setup and teardown of virtual machines in hypervisor-virtualized environments. It reads up to 32 bytes per clock cycle, as does the L1 data cache in both directions. The L1 is a write-back cache.

The 8-way set-associative L2 cache per core is configurable with 512 KB or 1 MB, twice the size of the Cortex-A76's; its latency for read accesses is between 9 and 11 clock cycles. An L2 TLB with 1280 entries offers so-called page aggregation, in which the microarchitecture can combine four small 4 KB memory pages into 16 KB, 16 KB pages into 64 KB and 64 KB pages into 256 KB.
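What page aggregation means for the address range covered by the L2 TLB can be estimated with a few lines of arithmetic. The sketch computes an upper bound under the assumption, made here purely for illustration, that every one of the 1280 entries maps a page of the given size; in practice the mix of page sizes depends on how contiguously the OS lays out memory.

```python
KIB = 1024
MIB = 1024 * KIB

L2_TLB_ENTRIES = 1280               # from the article
PAGE_SIZES_KIB = [4, 16, 64, 256]   # base page and the three aggregation steps

def tlb_reach_mib(entries, page_kib):
    """Address range covered if every entry maps one page of page_kib."""
    return entries * page_kib * KIB / MIB

reach = {p: tlb_reach_mib(L2_TLB_ENTRIES, p) for p in PAGE_SIZES_KIB}

for page_kib, mib in reach.items():
    print(f"{page_kib:>3} KB pages: up to {mib:.0f} MB covered")
```

Each aggregation step multiplies the reach by four, which matters for workloads with large data structures because fewer accesses miss in the TLB.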

Below the L2 caches, all cores share a system level cache (SLC) with any hardware accelerators and I/O. In a configuration with 64 cores and 64 MB in 32 memory banks, for example, it enables an average load latency of 22 ns. It is connected via the CoreLink CMN-600, a coherent mesh network according to the AMBA 5 CHI specification, which can contain up to 32 coherent CHI slave interfaces.

Arm has optimized the entire memory architecture for low latency, high bandwidth and scalability, but especially for extremely high parallelism in memory accesses. In the end, 68 in-flight load operations and 72 in-flight store operations are possible.

The power consumption of an N1 CPU including 512 KB of L2 cache is only 1.0 W or 1.8 W (at a clock frequency of 2.6 GHz or 3.1 GHz and a core supply voltage of 0.75 V or 1.0 V, respectively). The silicon area per core in TSMC's 7 nm implementation, including 512 KB or 1 MB of L2 cache, is only 1.2 mm² or 1.4 mm².
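For a 64-core design, these per-core figures add up as follows. The sketch covers the cores and their L2 caches only; SLC, mesh, memory controllers and I/O are not included because no figures were given for them. The comparison with the textbook first-order rule for dynamic power (proportional to f · V²) is our own addition and ignores leakage.

```python
CORES = 64  # core count of the hyperscale reference design

# Operating points and areas as quoted in the article (core plus L2).
LOW = {"freq_ghz": 2.6, "volt": 0.75, "core_w": 1.0}
HIGH = {"freq_ghz": 3.1, "volt": 1.0, "core_w": 1.8}
AREA_MM2 = {"512 KB L2": 1.2, "1 MB L2": 1.4}

total_w = {name: CORES * p["core_w"] for name, p in (("low", LOW), ("high", HIGH))}
total_area = {name: CORES * a for name, a in AREA_MM2.items()}

# First-order dynamic power model: P ~ f * V^2 (leakage ignored).
predicted_ratio = (HIGH["freq_ghz"] / LOW["freq_ghz"]) * (HIGH["volt"] / LOW["volt"]) ** 2
quoted_ratio = HIGH["core_w"] / LOW["core_w"]

print(f"64 cores: {total_w['low']:.1f} W at 2.6 GHz, {total_w['high']:.1f} W at 3.1 GHz")
for name, area in total_area.items():
    print(f"64 cores with {name}: {area:.1f} mm2")
print(f"power ratio, f*V^2 model: {predicted_ratio:.2f}, quoted: {quoted_ratio:.2f}")
```

The quoted increase from 1.0 W to 1.8 W is somewhat smaller than the simple f · V² rule would suggest, which is plausible given how coarse that rule is.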

Platform for infrastructure applications

Of course, a suitable CPU is not sufficient to meet the requirements of the infrastructure sector. Rather, the entire platform must fit.

An Activity Monitoring Unit (AMU) for each core controls the prioritization of dynamic voltage and frequency scaling (DVFS). Power virus scenarios are also actively combated. A power virus is a computer program that executes specific machine code in order to drive CPU power consumption to its maximum. Computer cooling systems are designed to dissipate power up to the thermal design power (TDP) rather than the maximum power, so a power virus could overheat the system if there is no logic to rein in the processor. The Neoverse N1 uses hardware throttling to bring the operating conditions down to TDP levels in a power virus scenario. For non-virus workloads, this has negligible effects. The power supply of the SoC therefore only has to be designed for TDP conditions and not for virus conditions.
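The principle of throttling down to TDP can be shown with a deliberately simple model. It is not a description of Arm's hardware mechanism, whose details were not disclosed in the presentation: the function name is hypothetical, and the sketch assumes that power scales linearly with clock frequency at a fixed voltage.

```python
def throttle(demand_w, tdp_w):
    """Return (clock_scale, power_w) such that power never exceeds tdp_w.

    demand_w is the power the workload would draw at full clock.
    Assumes power is proportional to clock frequency (a simplification).
    """
    if demand_w <= tdp_w:
        return 1.0, demand_w        # normal workload: no intervention
    scale = tdp_w / demand_w        # slow the clock just enough
    return scale, tdp_w

TDP_W = 105.0
trace = [60.0, 90.0, 140.0, 105.0]  # hypothetical demand per interval, in watts

for demand in trace:
    scale, power = throttle(demand, TDP_W)
    print(f"demand {demand:5.1f} W -> clock x{scale:.2f}, power {power:5.1f} W")
```

Only the interval that exceeds the TDP is slowed down, which matches the statement that ordinary workloads are hardly affected.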

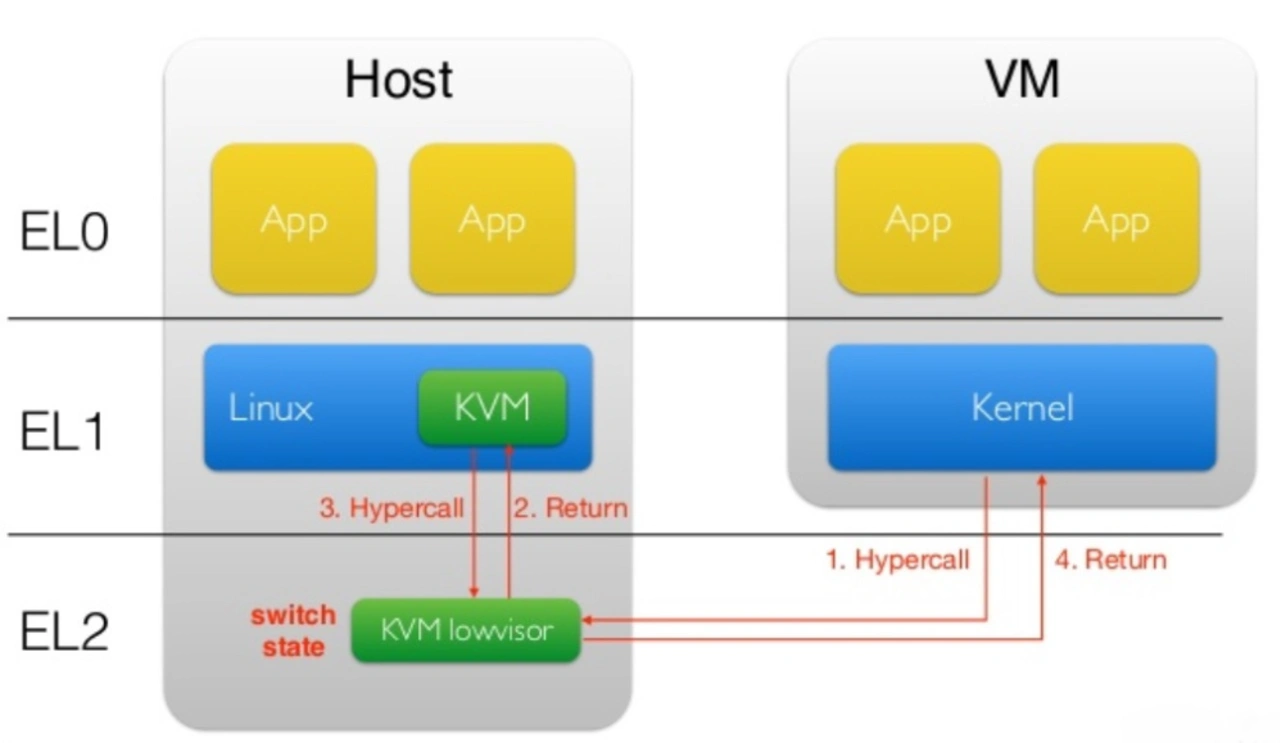

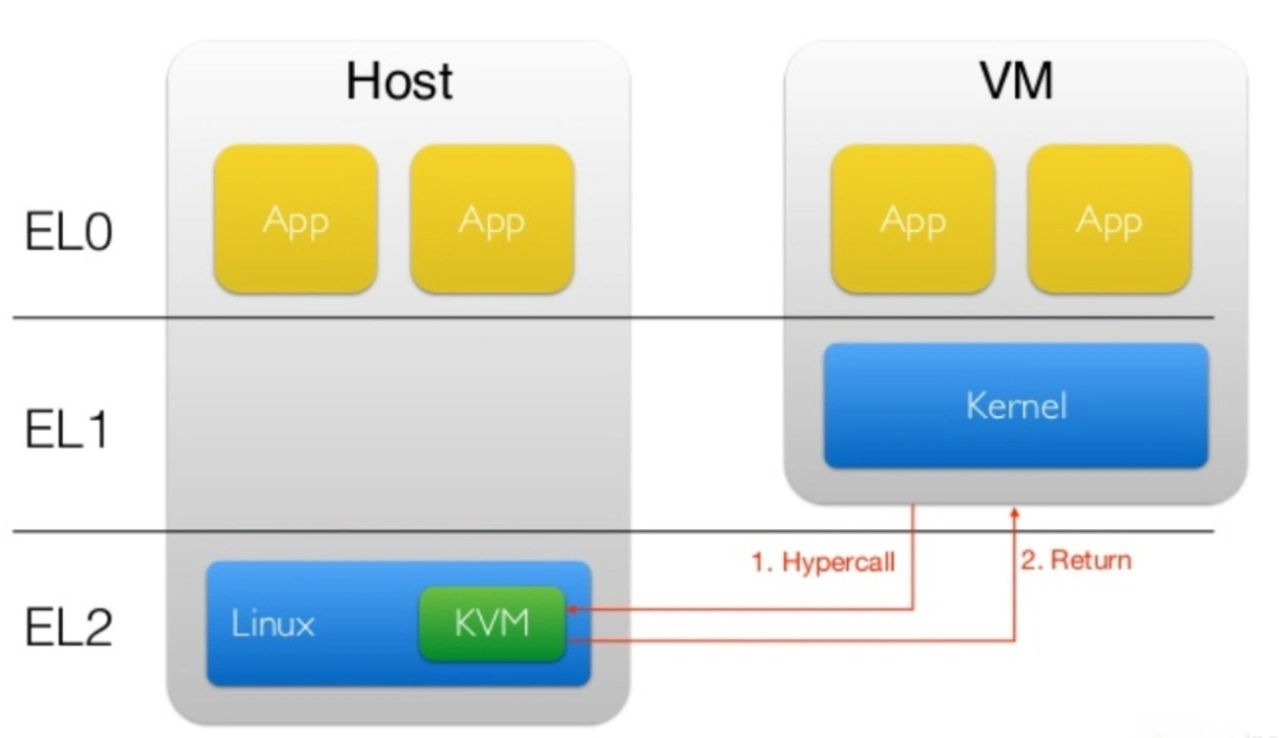

Another important feature is the Virtualization Host Extensions (VHE) of Armv8.2. They not only allow scaling up to 64 K virtual machines, but above all allow a host operating system to run unmodified in Exception Level 2 (EL2). Figure 2 shows a conventional scenario with a type 2 hypervisor such as KVM and Linux as the host OS in EL1. EL2 runs the so-called KVM lowvisor, which receives calls both from the VMs and from the KVM located in EL1. With VHE, it is possible to run both the Linux host OS and KVM completely in EL2 (Fig. 3), so that the cross-EL calls between KVM and the lowvisor, with their associated latencies, become simple function calls within one EL. Since the EL1/EL2 transitions between VMs and host cannot be avoided, Arm has minimized their overhead and optimized the MMU for virtualized nested paging.

Of course, RAS support is also required. The Armv8.2 RAS architecture not only provides ECC in all caches, using Hamming codes to detect double errors and correct single errors, but also allows the system to keep running until the corrupted data is actually used.
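How a Hamming code corrects one error and detects two can be demonstrated on a tiny word. The sketch below implements the classic extended Hamming(8,4) code for 4 data bits; it only illustrates the principle. The word widths and exact codes used in the N1 caches were not disclosed, and real cache ECC protects much wider words.

```python
def encode(nibble):
    """Encode 4 data bits into 8 bits: index 0 is overall parity,
    indices 1..7 form a Hamming(7,4) codeword (parity at 1, 2 and 4)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8
    code[3], code[5], code[6], code[7] = d
    code[1] = code[3] ^ code[5] ^ code[7]
    code[2] = code[3] ^ code[6] ^ code[7]
    code[4] = code[5] ^ code[6] ^ code[7]
    code[0] = sum(code[1:]) % 2      # make the parity over all 8 bits even
    return code

def decode(code):
    """Return (nibble, status); status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos          # XOR of the positions of all set bits
    overall = sum(code) % 2
    if syndrome == 0 and overall == 0:
        status = "ok"
    elif overall == 1:
        code[syndrome] ^= 1          # single error; syndrome 0 means bit 0 itself
        status = "corrected"
    else:
        return None, "uncorrectable" # even parity but nonzero syndrome: two errors
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, status

word = encode(0b1011)
one_flip = list(word); one_flip[6] ^= 1
two_flips = list(word); two_flips[2] ^= 1; two_flips[5] ^= 1

print(decode(word))
print(decode(one_flip))
print(decode(two_flips))
```

The overall parity bit is what separates the two cases: a single flipped bit makes the total parity odd and the syndrome points to its position, while two flipped bits leave the parity even but the syndrome nonzero.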

Hardware-assisted software analysis

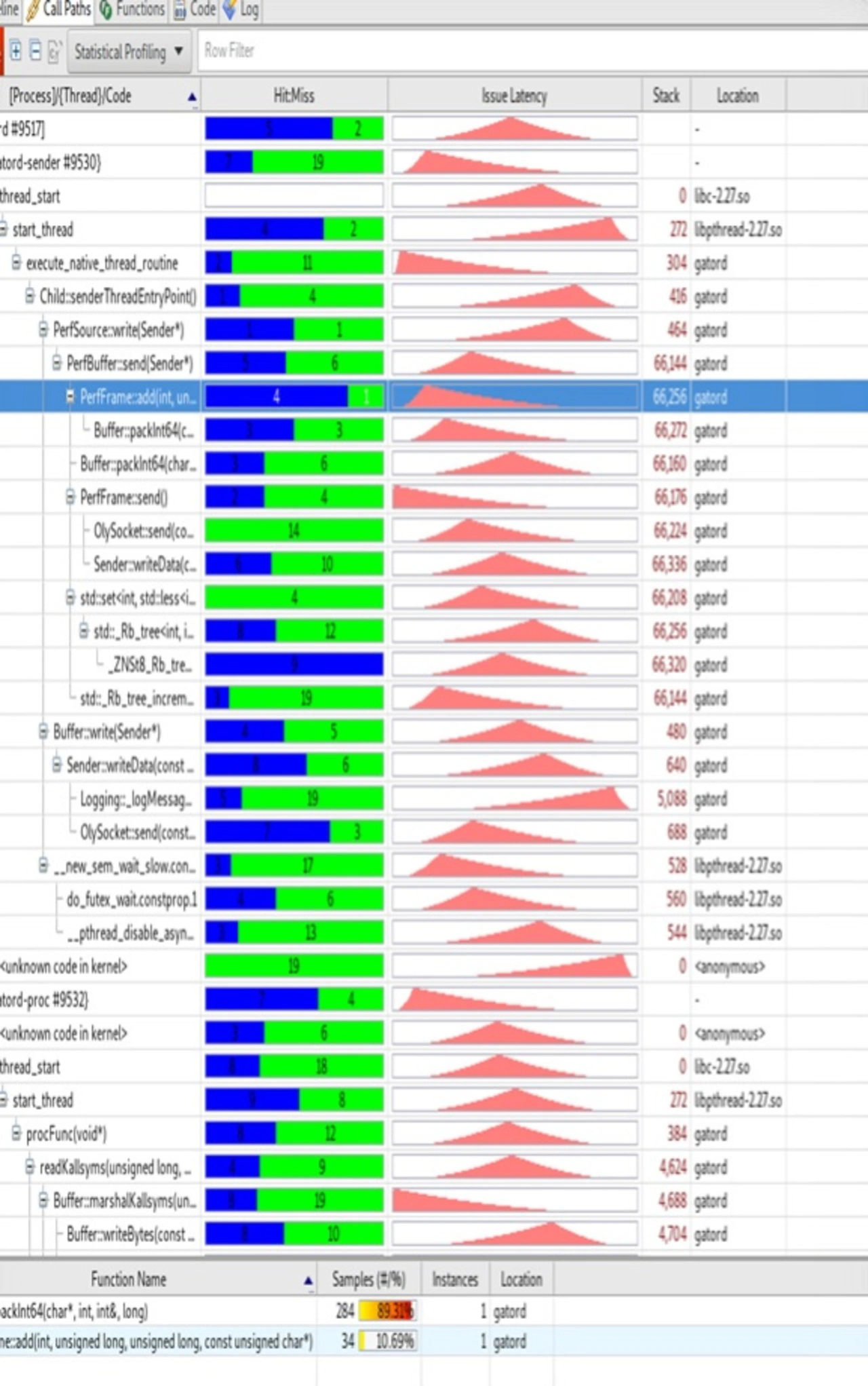

New to the Arm universe is the Statistical Profiling Extension (SPE). It can be used to log detailed information such as branch prediction results, cache hit/miss rates or the latency of data loads, helping developers optimize their software (Figure 4). The information is written directly to memory.

To scale to systems with 128 CPUs (or more, as there is no fundamental limitation), Arm, like Intel, uses a mesh network laid out like a grid (#), which provides very low latencies and high bandwidth while connecting processor cores, caches, memory controllers and I/O components. The paths are shorter because they are often direct; they are also more diverse and can change direction at any intersection. This eliminates many of the problems to be expected with other topologies, such as the ring bus of the Cortex-A72. Every node takes part in the communication within the network, so there are no dead ends. This also helps when scaling to even more processor cores without incurring large latency penalties.
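The advantage of shorter paths can be quantified with a simple hop count. The sketch compares a bidirectional ring with a square mesh for 64 nodes. The 8 × 8 layout, shortest-path routing and the use of hops as a proxy for latency are assumptions for illustration; the actual CMN-600 routing was not described in the presentation.

```python
from itertools import product

def ring_hops(a, b, n):
    """Shortest distance between two nodes on a bidirectional ring."""
    d = abs(a - b)
    return min(d, n - d)

def mesh_hops(a, b):
    """Manhattan distance between two (x, y) nodes on a 2D mesh."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def hop_stats(nodes, hops):
    """Average and maximum hop count over all pairs of distinct nodes."""
    dists = [hops(a, b) for a, b in product(nodes, repeat=2) if a != b]
    return sum(dists) / len(dists), max(dists)

N = 64
ring_avg, ring_max = hop_stats(range(N), lambda a, b: ring_hops(a, b, N))
mesh_nodes = list(product(range(8), repeat=2))   # assumed 8 x 8 layout
mesh_avg, mesh_max = hop_stats(mesh_nodes, mesh_hops)

print(f"ring: average {ring_avg:.2f} hops, worst case {ring_max}")
print(f"mesh: average {mesh_avg:.2f} hops, worst case {mesh_max}")
```

In this model the mesh needs roughly a third of the ring's average hops at 64 nodes, and the gap widens as the node count grows.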

The mesh network is also superior as caches grow larger, since data can be reached faster over shorter distances, even in cache slices that are not directly adjacent to the core. Among other things, this makes it possible to address the system level cache, which is physically distributed across the entire die, as a single unified cache. This in turn has consequences for accesses to the memory controllers and the I/O interfaces when data has to be fetched from them. The SLC has a bandwidth of more than 1 TB/s.

The coherent instruction cache, implemented for the first time as mentioned above, reduces data traffic dramatically, especially in large installations, because the contents of the instruction cache no longer have to be invalidated when a VM is restarted from a saved state. The atomic instructions introduced with Armv8.1 help with synchronizing multi-core systems, as does system-level cache stashing, which preloads the CPU caches before the data is requested. SmartNICs allow further scaling. These are network interface cards with their own processor that take over processing tasks the system CPU would normally perform, such as encryption and decryption, firewall, TCP/IP and HTTP processing, which makes them ideal for high-traffic web servers.

Impact on performance

As mentioned at the beginning, the performance of the HTTP server NGINX increased by a factor of 2.5, which is due to 2x fewer L1 cache misses, 3x fewer TLB misses and 7x fewer branch mispredictions. The 1.7-fold performance in Java-based benchmarks is due to object/memory allocation that is faster by a factor of 2.4, object/array initialization that is faster by a factor of 5 and a copy-char function that is faster by a factor of 1.6. Cache misses and branch mispredictions were reduced by a factor of 1.4 and the number of L2 accesses by a factor of 2.25 thanks to the optimized cache architecture.

For memcached, the number of branch mispredictions was reduced by a factor of 3, while the hit rate for the L1 cache increased by a factor of 2.

Arm sees the Neoverse N1 CPU in 64- to 128-core configurations in hyperscale data centers (power budget of 150 W or more), in 16- to 64-core configurations in edge computing (35 W to 105 W power budget), and in 8- to 32-core configurations in networking, storage and security (25 W to 65 W power budget).

Disclosure: DESIGN&ELEKTRONIK participated in the TechDays in San Jose at the invitation of Arm, the travel expenses were paid in full by Arm. Our reporting is not influenced by this and remains neutral and critical as usual. The article, like all the others on our portal, is written independently and is not subject to any specifications by third parties.
