Software

Managing the Risk of Adopting Third-Party Code

Continued from Part 1

Security

Failures can result in safety issues. A growing concern alongside them, especially now that connected products are commonplace across many industries, is security. Unfortunately, security incidents have also become commonplace, with data breaches and system hacks reported in the media almost daily. Privacy breaches and data leaks can attract large government fines, damage the reputation of the enterprise, and may result in litigation and costly damages to offset and remediate the consequences for end users.

For example, OpenSSL secures the connections of a large share of websites and is therefore relied on, directly or indirectly, by most internet users. The Heartbleed vulnerability (CVE-2014-0160) in the OpenSSL cryptographic library, disclosed in 2014, led to data breaches [1]. If similar vulnerabilities are present in safety-critical systems, security issues can also compromise safety.

Techniques for Integrating Third-Party Software

Proven techniques exist to mitigate these risks. Functional safety standards provide guidance on the use of third-party software, which is often treated as software of unknown provenance (SOUP). For example, IEC 62304 Medical device software – Software life cycle processes (IEC, 2006) details the actions to be taken when third-party software without a proven pedigree is used, perhaps because details of the development process used to create it are unavailable.
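A practical first step is knowing exactly which SOUP items a product contains. IEC 62304 asks, among other things, that each SOUP item be identified by its title, its manufacturer and a unique SOUP designator such as a version. The sketch below models such a register in C++; the `SoupItem` type, the extra `requirementsDocumented` flag and the completeness check are hypothetical illustrations, not a format defined by the standard.

```cpp
#include <string>
#include <vector>

// Hypothetical record for one SOUP item. Title, manufacturer and a unique
// designator (e.g. a version) are the identification details IEC 62304 asks
// for; requirementsDocumented is an illustrative extra field.
struct SoupItem {
    std::string title;
    std::string manufacturer;
    std::string designator;
    bool requirementsDocumented = false;
};

// Returns the titles of items whose identification is incomplete or whose
// requirements have not been documented yet, in register order.
std::vector<std::string> incompleteItems(const std::vector<SoupItem>& items) {
    std::vector<std::string> gaps;
    for (const auto& item : items) {
        const bool identified = !item.title.empty() &&
                                !item.manufacturer.empty() &&
                                !item.designator.empty();
        if (!identified || !item.requirementsDocumented) {
            gaps.push_back(item.title.empty() ? "<untitled>" : item.title);
        }
    }
    return gaps;
}
```

For example, a register entry for OpenSSL with an empty designator would be reported, prompting the integrator to record exactly which version is in use.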

ISO 26262 also suggests that “a set of measures can be:

- availability of the specification for the system to be integrated;

- evidence that the system to be integrated complies with its requirements by test report;

- structured design analysis for systematic design faults by FMEA, FTA, application of established design pattern/configurations;

- evidence that the system to be integrated is suitable for its intended use;

- design verification/validation testing by highly accelerated life testing, environmental testing, testing beyond specification limits, robustness testing; and

- analysis of field data”.

Isolating Third-Party Software

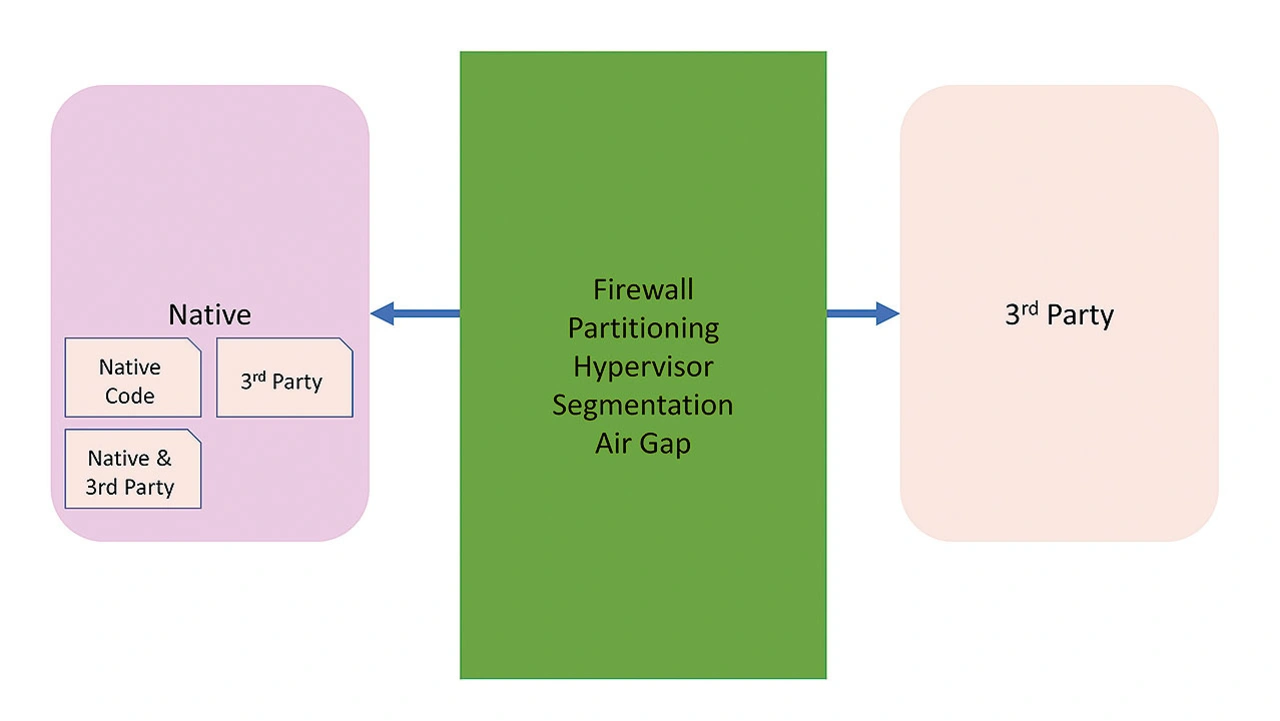

One approach to mitigating the risks of integrating third-party software is to isolate it from the rest of the code base. Isolation may be achieved with a hypervisor or firewall, through partitioning and segmentation, or with a physical air gap.
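Partitioning can be as coarse as a separate machine or as fine as a separate operating-system process. The sketch below illustrates the process-level case, assuming a POSIX platform: the hypothetical `runIsolated` helper executes a third-party routine in a forked child process, so a crash in that routine terminates only the child. It is an illustration, not a substitute for hypervisor- or hardware-enforced separation; the child still runs with the caller's privileges and inherited resources.

```cpp
#include <functional>
#include <optional>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Runs `untrusted` in a child process created with fork() so that a crash in
// the third-party code cannot corrupt or terminate the caller. The child
// returns its int result through a pipe. Yields std::nullopt if the child is
// killed by a signal, exits with a nonzero status, or delivers no result.
std::optional<int> runIsolated(const std::function<int()>& untrusted) {
    int fds[2];
    if (pipe(fds) != 0) {
        return std::nullopt;
    }
    const pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return std::nullopt;
    }
    if (pid == 0) {
        // Child: run the third-party routine and report its result.
        close(fds[0]);
        const int result = untrusted();
        const ssize_t written = write(fds[1], &result, sizeof result);
        close(fds[1]);
        // _exit() avoids flushing stdio buffers inherited from the parent.
        _exit(written == static_cast<ssize_t>(sizeof result) ? 0 : 1);
    }
    // Parent: close the write end so read() sees EOF if the child dies.
    close(fds[1]);
    int result = 0;
    const ssize_t got = read(fds[0], &result, sizeof result);
    close(fds[0]);
    int status = 0;
    if (waitpid(pid, &status, 0) != pid) {
        return std::nullopt;
    }
    if (!WIFEXITED(status) || WEXITSTATUS(status) != 0 ||
        got != static_cast<ssize_t>(sizeof result)) {
        return std::nullopt;
    }
    return result;
}
```

For example, `runIsolated([] { return 42; })` yields 42, while a routine that aborts or exits abnormally yields `std::nullopt`, leaving the caller free to fall back to a safe state.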

Figure 1 provides an overview of the isolation approach. The third-party software on the right side of the figure has been successfully isolated. As the left side of the figure illustrates, however, there may be situations where native and open-source software reside in close proximity.

SOUP by Example

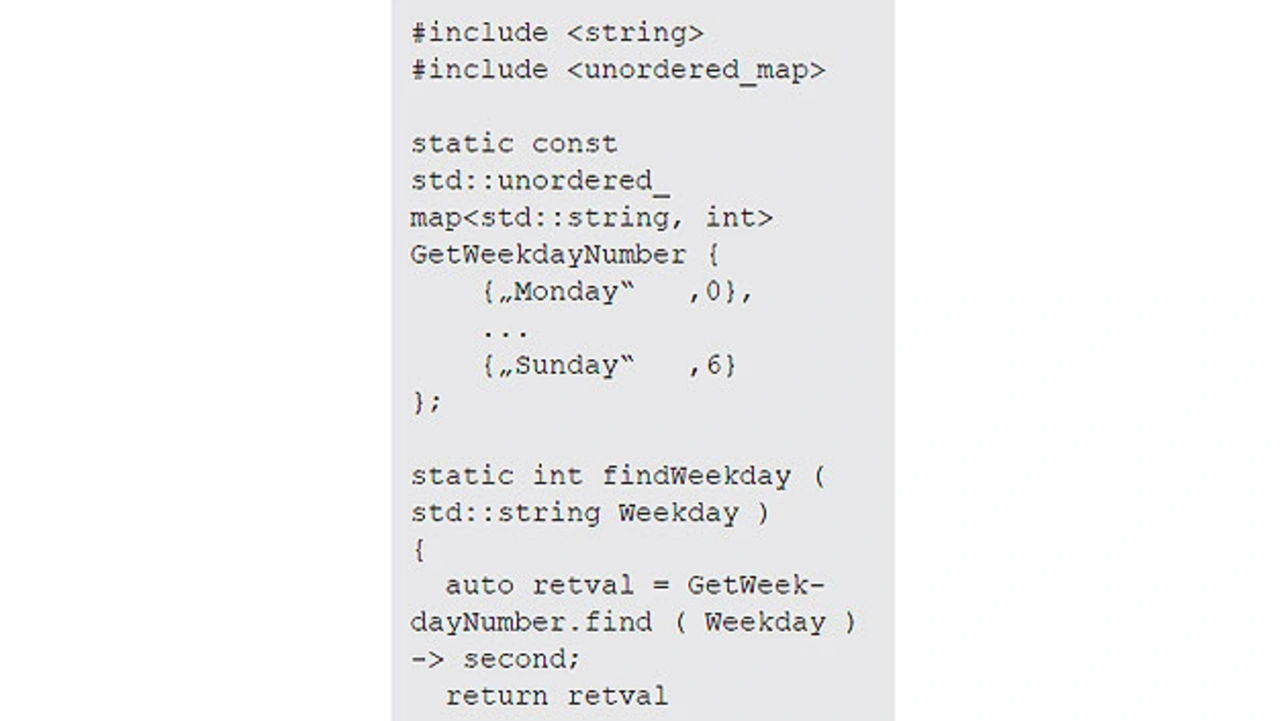

Consider how the recommended software development lifecycle practices apply when native code includes external Standard Template Library (STL) header files (Listing).

The starting point is to derive a set of requirements for the findWeekday method, together with a set of test cases that validate those requirements and check the robustness of the code at boundary cases.
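The Listing itself is not reproduced in the text, so the following is a hypothetical stand-in: a free function `findWeekday(year, month, day)` that relies on STL headers, plus requirements-based test cases. The requirements R1 to R3 (Gregorian calendar, years 1583 to 9999, invalid dates rejected) are assumptions chosen for illustration, and the weekday is computed with Sakamoto's method, which may differ from what the original code does.

```cpp
#include <array>
#include <optional>
#include <string>

// Hypothetical requirements for this sketch:
//  R1: return the English weekday name of a valid Gregorian date;
//  R2: accept years 1583..9999 only (first full Gregorian year onwards);
//  R3: return std::nullopt for an invalid month or day, including
//      29 February in non-leap years.
namespace {

bool isLeapYear(int year) {
    return (year % 4 == 0 && year % 100 != 0) || year % 400 == 0;
}

int daysInMonth(int year, int month) {
    static constexpr std::array<int, 12> kDays = {31, 28, 31, 30, 31, 30,
                                                  31, 31, 30, 31, 30, 31};
    return (month == 2 && isLeapYear(year)) ? 29 : kDays[month - 1];
}

}  // namespace

std::optional<std::string> findWeekday(int year, int month, int day) {
    if (year < 1583 || year > 9999 || month < 1 || month > 12) {
        return std::nullopt;  // R2, R3
    }
    if (day < 1 || day > daysInMonth(year, month)) {
        return std::nullopt;  // R3
    }
    // Sakamoto's method: 0 = Sunday, 1 = Monday, ..., 6 = Saturday.
    static constexpr std::array<int, 12> kOffsets = {0, 3, 2, 5, 0, 3,
                                                     5, 1, 4, 6, 2, 4};
    static const std::array<std::string, 7> kNames = {
        "Sunday", "Monday", "Tuesday", "Wednesday",
        "Thursday", "Friday", "Saturday"};
    const int y = (month < 3) ? year - 1 : year;
    const int index =
        (y + y / 4 - y / 100 + y / 400 + kOffsets[month - 1] + day) % 7;
    return kNames[index];
}

// Requirements-based and boundary test cases; returns the number of failures.
int countFailures() {
    struct Case { int y, m, d; std::optional<std::string> expected; };
    const std::array<Case, 8> cases = {{
        {2000, 1, 1, "Saturday"},      // R1: known reference date
        {2000, 2, 29, "Tuesday"},      // R3 boundary: century leap year
        {1900, 2, 29, std::nullopt},   // R3 boundary: century non-leap year
        {2024, 1, 1, "Monday"},        // R1
        {1999, 12, 31, "Friday"},      // R1: last day of a year
        {1582, 12, 31, std::nullopt},  // R2 boundary: just below range
        {2023, 4, 31, std::nullopt},   // R3: 30-day month
        {2023, 13, 1, std::nullopt},   // R3: invalid month
    }};
    int failures = 0;
    for (const auto& c : cases) {
        if (findWeekday(c.y, c.m, c.d) != c.expected) {
            ++failures;
        }
    }
    return failures;
}
```

With these cases `countFailures()` returns 0; a nonzero count would feed the analysis of failed test cases.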

Any failed test cases are then analyzed, the code is checked to ensure that it is not compromised, and the requirements are supplemented or amended to reflect the improved understanding of the code. Once the SOUP requirements are understood, test cases that validate them can be constructed and any further failures addressed iteratively.

The process is straightforward for such a small example, but applying the SOUP methodology is potentially time-consuming, and the effort depends on the extent to which the software's provenance can be established.

The STL is an example of where the SOUP methodology is practical. It is provided by the GNU Compiler Collection (GCC) project, which uses documented planning, configuration and change control processes, has a documented requirements specification (the library's behavior is specified by the ISO C++ standard), and maintains test suites that the integrator can execute.

If the integrator carefully reviews these practices and judges them appropriate, an argument can be made for trusting the STL and concluding that it is fit for purpose. Where the risk of failure is critical, or the behavior of the third-party software is undocumented or unclear, extra confidence may be obtained by applying the SOUP methodology.

Design and Code Review

Design and code reviews are another established technique for identifying weaknesses in architectural design and source code. Where the source code of third-party software is available, it should be checked for known coding issues. Coding guidelines such as MISRA C and coding issue databases such as the Common Weakness Enumeration (CWE) are readily accessible, and checking against them can be automated with static analysis tools.
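As an illustration of the kind of known issue such checks look for, consider CWE-190 (Integer Overflow or Wraparound), which commonly appears when a buffer size is computed as `count * elementSize`. The hypothetical helper below is a reviewed version of that calculation that detects wraparound before the result is used; the names are illustrative and not taken from any particular third-party library.

```cpp
#include <cstddef>
#include <limits>
#include <optional>

// Unreviewed pattern typically flagged by reviewers and analysis tools:
//     std::size_t bytes = count * elementSize;  // may silently wrap around
// A wrapped value can cause an undersized allocation and a later
// out-of-bounds write.

// Reviewed version: returns std::nullopt instead of a wrapped product.
std::optional<std::size_t> checkedBufferSize(std::size_t count,
                                             std::size_t elementSize) {
    if (elementSize != 0 &&
        count > std::numeric_limits<std::size_t>::max() / elementSize) {
        return std::nullopt;  // count * elementSize would exceed SIZE_MAX
    }
    return count * elementSize;
}
```

The caller must now handle the failure case explicitly, which is exactly the kind of behavior a code review can confirm.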

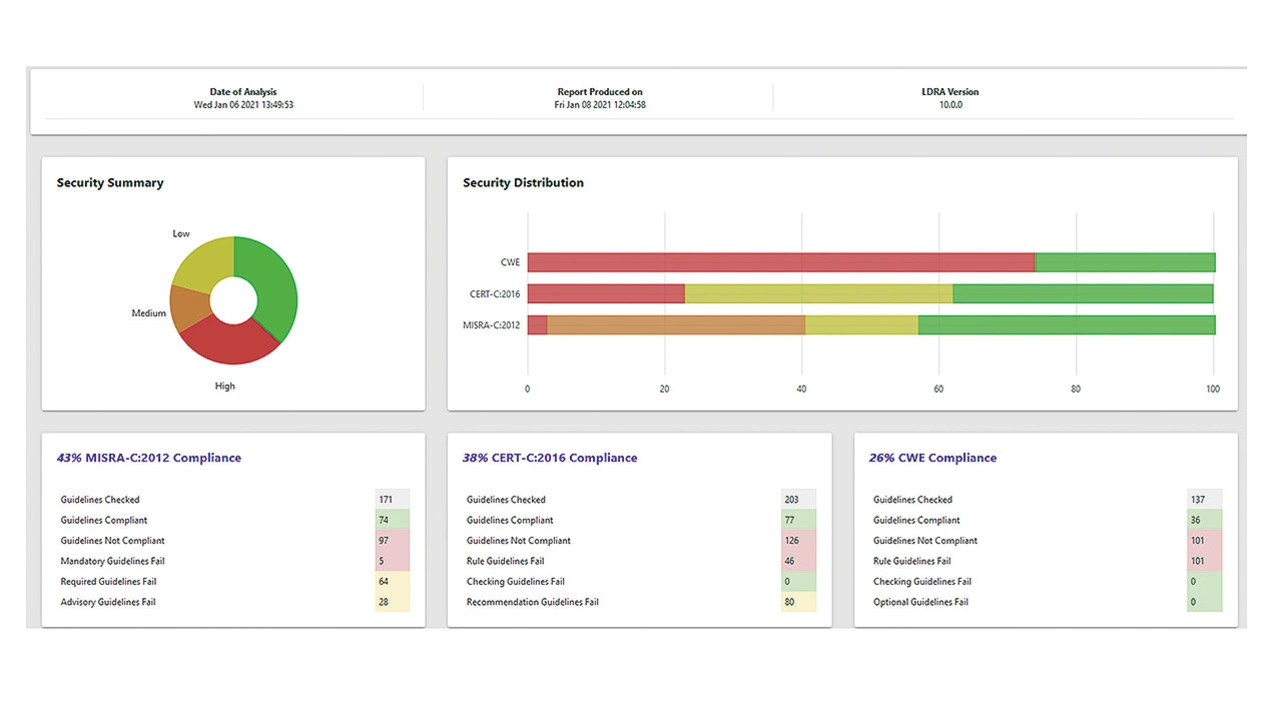

Figure 2 shows a typical output of a C code analysis tool measuring compliance with various coding standards.

When source code is not available, for example if commercial off-the-shelf (COTS) software is delivered as a system image, an alternative is to use the compliance guidance in MISRA Compliance:2020. By following this guidance, third-party software suppliers can provide evidence of their code's compliance with the MISRA guidelines and, by extension, of the quality of their development process.
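MISRA Compliance:2020 organizes this evidence around documents such as a Guideline Compliance Summary, which records the compliance status of each guideline. The simplified C++ model below checks such a summary for internal consistency: mandatory guidelines admit no deviations, and only advisory guidelines may be disapplied. The types, field names and checks are hypothetical simplifications for illustration, not a format defined by MISRA, and the model conservatively treats any non-compliance not covered by a deviation as a problem.

```cpp
#include <string>
#include <vector>

enum class Category { Mandatory, Required, Advisory };
enum class Status { Compliant, Deviations, Violations, Disapplied };

// One row of a simplified guideline compliance summary.
struct GuidelineEntry {
    std::string guideline;  // guideline identifier
    Category category;
    Status status;
};

// Returns a description of each entry that is inconsistent with the
// simplified category rules; an empty result supports a compliance claim.
std::vector<std::string> findProblems(
    const std::vector<GuidelineEntry>& summary) {
    std::vector<std::string> problems;
    for (const auto& e : summary) {
        if (e.status == Status::Violations) {
            problems.push_back(e.guideline +
                               ": violations not covered by deviations");
        } else if (e.status == Status::Deviations &&
                   e.category == Category::Mandatory) {
            problems.push_back(e.guideline +
                               ": mandatory guidelines cannot be deviated");
        } else if (e.status == Status::Disapplied &&
                   e.category != Category::Advisory) {
            problems.push_back(e.guideline +
                               ": only advisory guidelines can be disapplied");
        }
    }
    return problems;
}
```

An integrator receiving such a summary from a supplier could run a check like this as part of the evidence review.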

When to Integrate Third-Party Code?

One popular approach to minimizing integration effort is to perform the integration once and then “freeze” the code base so that no changes can be made for the duration of the project. Each software release is then considered a “certified” version of the software, in accordance with ISO 26262, for example. If the third-party software is updated to a new version, the validation and verification processes must be repeated using the new code base.
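Freezing only works if the build can tell when the frozen version has changed. A minimal sketch, assuming the integrator records the certified version of each third-party component: the hypothetical `needsReverification` check flags any difference between the certified and the detected version, and `detectedLibstdcxxRelease` reads the libstdc++ major release from the `_GLIBCXX_RELEASE` macro, which GCC 7 and later define. That macro carries only the major release, so a real check would need a finer-grained version source.

```cpp
#include <optional>

// Version of a third-party component, e.g. as recorded when it was
// verified and "frozen".
struct Version {
    int major = 0;
    int minor = 0;
    int patch = 0;
};

// Any change, upgrade or downgrade, means the existing verification
// evidence no longer applies to the code actually being built.
constexpr bool needsReverification(const Version& certified,
                                   const Version& actual) {
    return certified.major != actual.major ||
           certified.minor != actual.minor ||
           certified.patch != actual.patch;
}

// Major release of the libstdc++ in use, if it can be determined.
std::optional<int> detectedLibstdcxxRelease() {
#if defined(_GLIBCXX_RELEASE)
    return _GLIBCXX_RELEASE;
#else
    return std::nullopt;  // not libstdc++ (e.g. libc++ or the MSVC STL)
#endif
}
```

Because `needsReverification` is `constexpr`, it can also back a `static_assert` so that a build against an unexpected version fails at compile time instead of silently invalidating the certification.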

This approach has pitfalls, the most notable being that it may not be valid to assume the third-party software will never need to change. For example, any security-related system must assume that a breach will occur and must have effective remediation planned, which is likely to involve code changes.

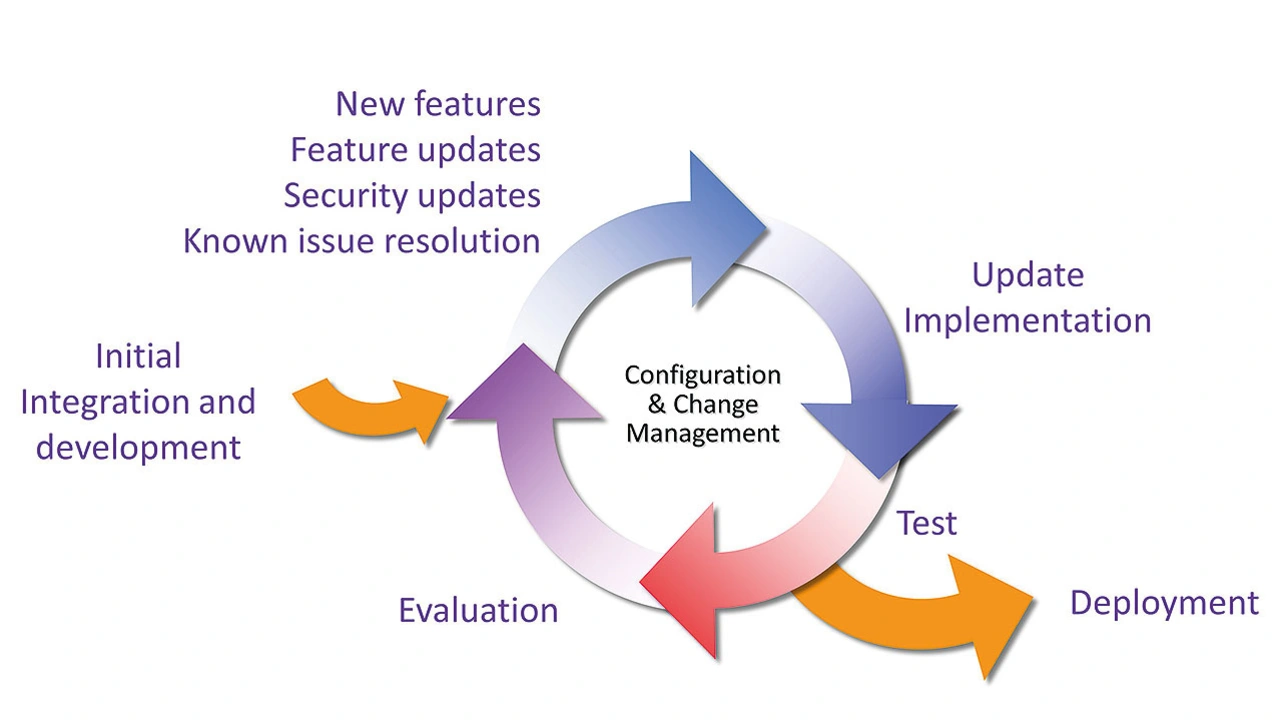

Another approach, which is becoming best practice in some industries, is to integrate third-party software frequently, taking advantage of the latest features and security updates. Figure 3 presents a typical third-party software integration process within a wider iterative software development process.
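Frequent integration is often triggered by security advisories. A minimal sketch of that trigger, assuming versions can be compared component by component: the hypothetical `isAffected` function tests whether an integrated OpenSSL version falls inside an advisory's affected range. The example data uses the Heartbleed range (OpenSSL 1.0.1 through 1.0.1f, fixed in 1.0.1g); pre-release versions are not modelled, and real advisory feeds and version schemes are more varied than this.

```cpp
#include <tuple>

// OpenSSL-style version such as 1.0.1f: numeric parts plus an optional
// letter suffix ('\0' when absent, so 1.0.1 sorts before 1.0.1a).
struct OpensslVersion {
    int major;
    int minor;
    int fix;
    char letter;
};

// Lexicographic comparison: major, then minor, then fix, then letter.
bool operator<=(const OpensslVersion& a, const OpensslVersion& b) {
    return std::tie(a.major, a.minor, a.fix, a.letter) <=
           std::tie(b.major, b.minor, b.fix, b.letter);
}

// An advisory's affected range, inclusive at both ends.
struct AffectedRange {
    OpensslVersion first;
    OpensslVersion last;
};

bool isAffected(const OpensslVersion& v, const AffectedRange& r) {
    return r.first <= v && v <= r.last;
}

// Heartbleed (CVE-2014-0160): 1.0.1 through 1.0.1f; fixed in 1.0.1g.
const AffectedRange kHeartbleed{{1, 0, 1, '\0'}, {1, 0, 1, 'f'}};
```

In an iterative process, a positive result would schedule an update to a fixed release followed by the usual regression testing.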

IEC 62304 also lists “compatibility with upgrades or multiple SOUP or other device versions” among the functional and capability requirements that medical device software requirements may include.

References

[1] USN-2165-1: OpenSSL vulnerabilities. Canonical, 7 April 2014, https://ubuntu.com/security/notices/USN-2165-1.