Ultra-Low-Power Processor with RISC-V

IoT Processor for AI Applications

RISC-V allows the instruction set to be extended. Special instructions for vector processing deliver the high computing power that neural networks require. Combined with dynamic energy management, this results in an AI IoT processor for battery operation.

In 2016 we became interested in what would happen if IoT sensors and devices started to process rich signals such as sounds, vibrations and images. At the time, IoT sensors generally produced small amounts of data: temperature or humidity readings, or even just the state of a digital signal.

The first deployments of IoT sensors had underlined the importance of battery operation. Without a very long battery life, the deployment and operational costs of a sensor quickly destroyed many possible business cases. To support this need, several ‘low power wide-area network’ technologies were emerging, such as Sigfox, LoRa and NB-IoT.

These networks traded off network bandwidth and latency for large cell size and low energy requirements at the sensor. Looking at these networks, we realized that they were fundamentally incompatible with transporting rich signals. Connecting sensors that process rich signals to these networks would require large quantities of information to be processed directly in the sensing device. To preserve battery operation, this would have to be done at energy levels that were not achievable with the available processing devices (low-power MCUs).

Computational agility

Looking at the type of processing that would need to be done, we realized that its scope was large. For example, to process a sound or vibration, a significant proportion of the compute load would be in signal processing, noise reduction, echo cancelation, feature extraction and even beam-forming in multi-microphone setups.

Only a small portion of the compute load would actually be in the inference operation. Combining multiple signal types, such as audio plus image, would require even more computational agility in the processor. It was also clear that most of the algorithms exhibited some degree of parallelism: some were extremely parallelizable, some less so.

Power agility

At the same time, IoT devices spend most of their time doing nothing, waiting for an external or timed event. When the event occurs, the device must wake up as fast as possible, do an appropriate amount of processing and get back to sleep as fast as possible. In the audio example above, waking up too slowly means missing the first part of a sound, which degrades the ability to recognize it.

In some cases the processing activity after waking up may be low, for network maintenance for example; in other cases, when a signal needs to be processed, the load will be significant. The time spent ‘transitioning’ between these power states, and the ability to scale energy usage appropriately and quickly, would be decisive for the overall energy efficiency of the device.
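The effect of transition time on the energy budget can be made concrete with a simple duty-cycle model. The following is a minimal sketch, assuming the device is in exactly one of three states (sleeping, transitioning, active) during each period and that each state draws constant power. The function name `average_power` and all of its parameters are illustrative, and no figures for any real device are implied.

```c
/* Average power (in watts) over one wake / process / sleep period.
 * Assumption: the period is split into sleep, transition and active
 * phases, each with a constant power draw. All names are hypothetical. */
double average_power(double period_s,
                     double p_sleep_w,
                     double t_active_s, double p_active_w,
                     double t_transition_s, double p_transition_w)
{
    /* Whatever time is not spent active or transitioning is spent asleep. */
    double t_sleep_s = period_s - t_active_s - t_transition_s;

    /* Energy is power multiplied by time, summed over the three phases. */
    double energy_j = p_sleep_w * t_sleep_s
                    + p_active_w * t_active_s
                    + p_transition_w * t_transition_s;

    return energy_j / period_s;
}
```

In this model, whenever the transition power is higher than the sleep power, every extra microsecond spent transitioning raises the average power without doing useful work, which is why fast power state changes matter for devices that wake frequently.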

With these observations in hand, we saw that we would need a processor that had some of the attributes of an MCU (fast power state changes, extremely low standby consumption) but that could, when needed, achieve a compute capability in line with a multi-core high-end application processor.

To achieve this high-end computational capability, we needed to exploit the parallel nature of the workload, and to do so in an extremely fine-grained way that allows large speedup factors even for ‘difficult’ algorithms. Finally, power transitions needed to be ultra-fast to make sure that energy was not wasted when moving between different states.

In February 2018, GreenWaves Technologies announced GAP8, an IoT application processor designed to respond to these challenges. GAP8’s heritage is strongly linked to the Parallel Ultra Low Power (PULP) project of ETH Zurich and the University of Bologna, of which Eric Flamand, GreenWaves’ CTO, was one of the founding members.

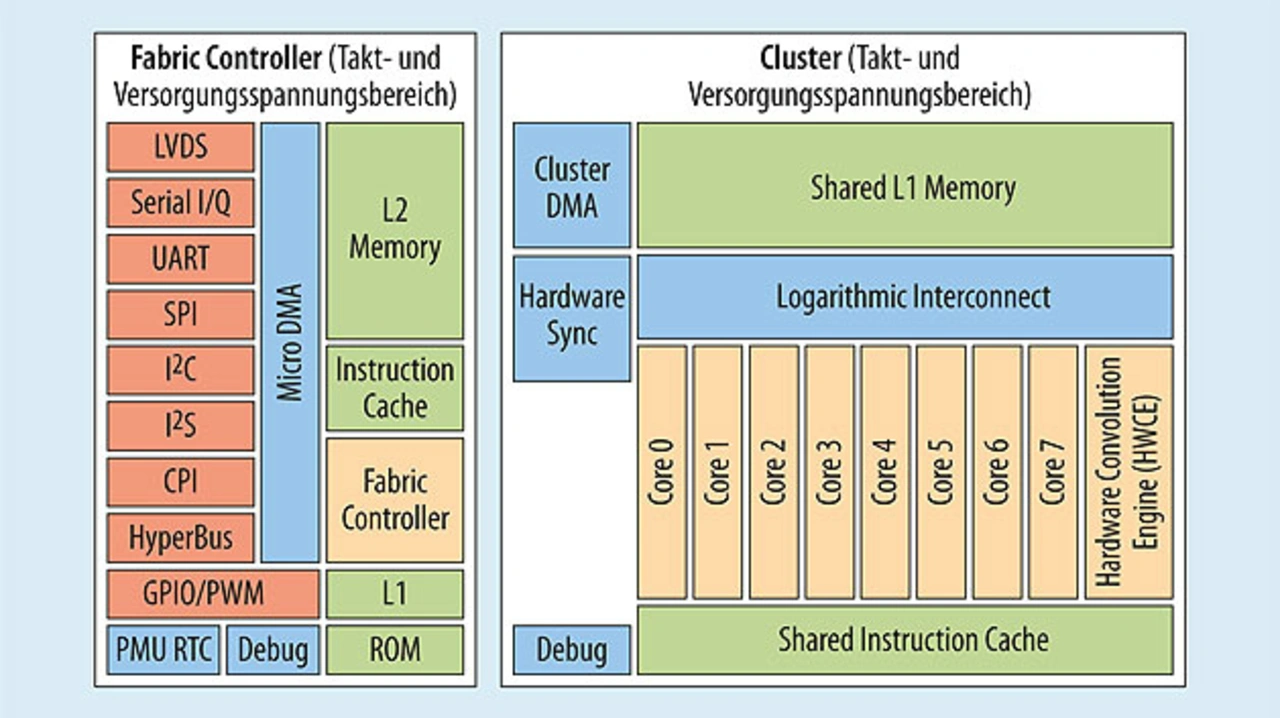

PULP provides a solid research foundation on which our work is based. The PULP project has produced several open-source RISC-V core designs and several generations of test chips. GAP8 is not just a revolutionary device from a technology perspective; it is also one of the first attempts in the world to bring the benefits of open source to a semiconductor product (Figure 1).

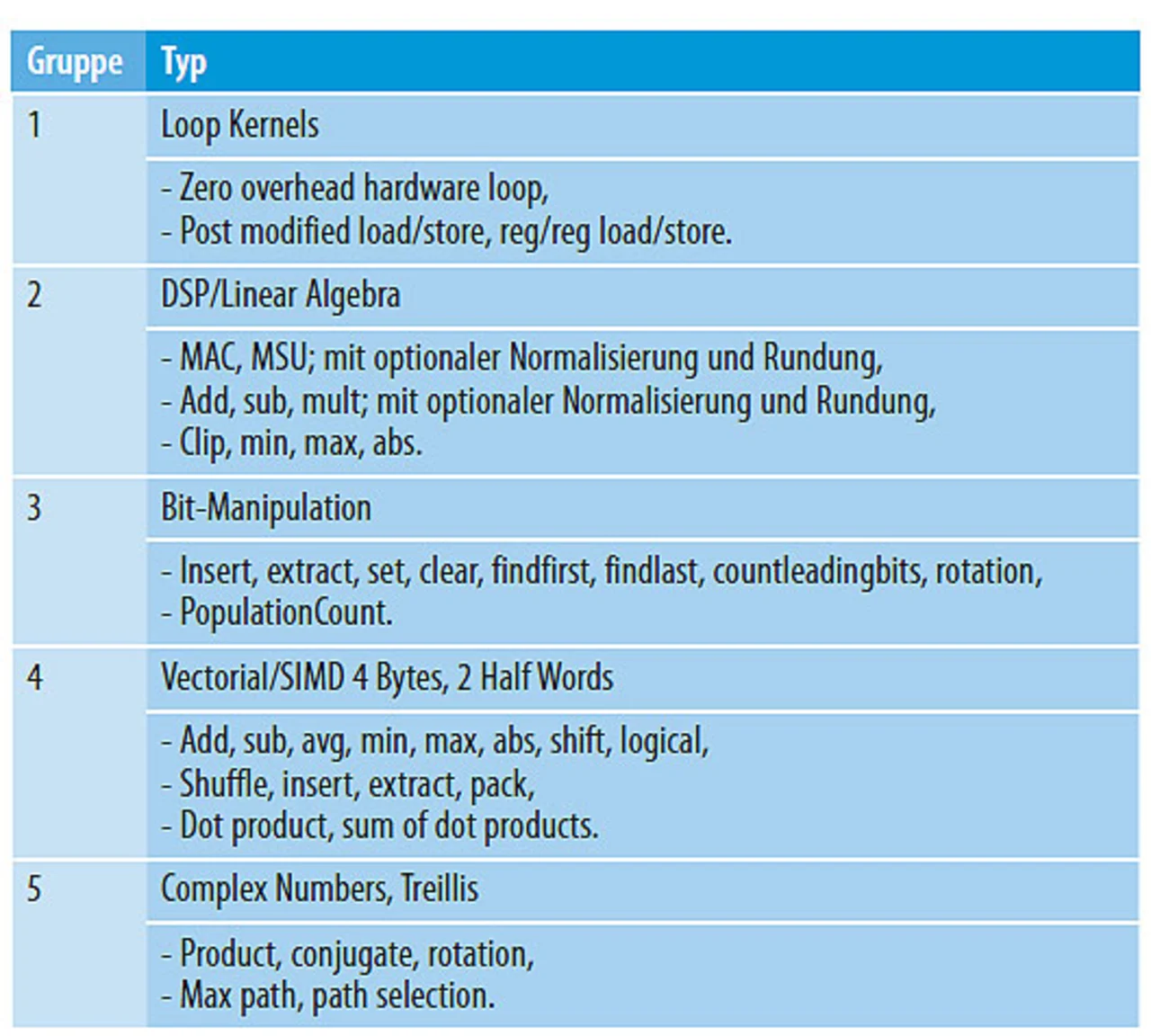

GAP8 is an 8 + 1 core MCU-class processor. All of the cores implement the RISC-V RV32IMC instruction set architecture (ISA). We have used the custom extension capability of the RISC-V ISA to add a series of instructions that further optimize signal processing tasks. Zero-overhead hardware loops, DSP/linear algebra operations, bit manipulation, and vector/SIMD extensions for 8- and 16-bit fixed-point numbers increase our instruction-level processing capability with a modest increase in gate count (Table).

One of the RISC-V cores, which we call the fabric controller (FC), is used as a classic MCU for acquisition, control and communications; the other eight are organized as a parallel computing cluster. The FC and the cluster operate in separate dynamic voltage and frequency scaling (DVFS) domains, allowing clock frequency and voltage to be dynamically tuned to match the current workload. All power management and the RTC are on-chip, increasing GAP8’s agility when switching between power states and ensuring ultra-low standby current.

The cluster incorporates 8 RISC-V cores, identical to the FC, but attached to a shared L1 memory area with a logarithmic interconnect delivering single cycle access to the memory 98% of the time.

All task dispatch, join and inter-core synchronization is carried out in hardware. A task can be forked and running on all cores in 3 to 4 cycles. As soon as a core has finished an operation it calls a barrier, implemented as a read to the cluster hardware synchronization engine that returns when the core wakes.

The core is clock-gated on the next cycle, after which it consumes only leakage power. As soon as all cores have hit the barrier, they are all running again on the next cycle. This extremely low-overhead parallelism achieves our goal of enabling good speedup on algorithms where the parallel portion of the code can be as short as 50 cycles.

A shared instruction cache between the cluster cores limits instruction fetches from memory, exploiting the fact that the cores will be running the same kernel on different data. The cluster also includes a generalized interface for dedicated accelerators, which address the L1 memory identically to the cores.
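A rough model shows why the fork and barrier cost matters so much for short parallel regions. The sketch below assumes that the work divides evenly across the cores and that the only overhead is a fixed number of cycles paid once per region. `region_speedup` and its parameters are illustrative names, not part of any GAP8 API.

```c
/* Idealized speedup of a parallel region.
 * Assumptions: `work_cycles` of work split perfectly evenly over `cores`,
 * plus `overhead_cycles` paid once for dispatch and synchronization.
 * Memory contention and load imbalance are ignored. */
double region_speedup(double work_cycles, int cores, double overhead_cycles)
{
    double parallel_cycles = work_cycles / cores + overhead_cycles;
    return work_cycles / parallel_cycles;
}
```

For a 50-cycle region on 8 cores with an assumed overhead of 4 cycles, this model gives a speedup of about 4.9. With a hypothetical software dispatch cost of 1000 cycles, the same region would run slower than on a single core, so fine-grained parallelism only pays off when dispatch and synchronization cost a handful of cycles.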

GAP8 includes a specialized convolution accelerator capable of a single 5 × 5 convolution with accumulation on 16-bit weights and activations into a 32-bit output value in a single clock cycle.
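As a reference for the arithmetic involved, the following portable C sketch computes one 5 × 5 convolution output with 16-bit weights and activations accumulated into a 32-bit value, which is 25 multiply-accumulate operations. It illustrates the computation only: the function name, the row-major layout and the `stride` parameter are assumptions, and any rounding, saturation or scaling that the accelerator may apply is not modeled.

```c
#include <stdint.h>

#define KSIZE 5  /* kernel width and height */

/* Accumulate one 5 x 5 convolution window into `acc`.
 * `in` points at the top-left activation of the window inside a
 * row-major image whose rows are `stride` elements apart.
 * `w` holds the 25 kernel weights in row-major order.
 * Assumption: the caller keeps values small enough that the 32-bit
 * accumulator does not overflow. */
int32_t conv5x5_acc(const int16_t *in, int stride,
                    const int16_t w[KSIZE * KSIZE], int32_t acc)
{
    for (int r = 0; r < KSIZE; r++) {
        for (int c = 0; c < KSIZE; c++) {
            /* Widen to 32 bits before multiplying. */
            acc += (int32_t)in[r * stride + c] * (int32_t)w[r * KSIZE + c];
        }
    }
    return acc;
}
```

Passing the previous result back in as `acc` lets several input channels be summed into the same output value, which is one way to read "convolution with accumulation".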

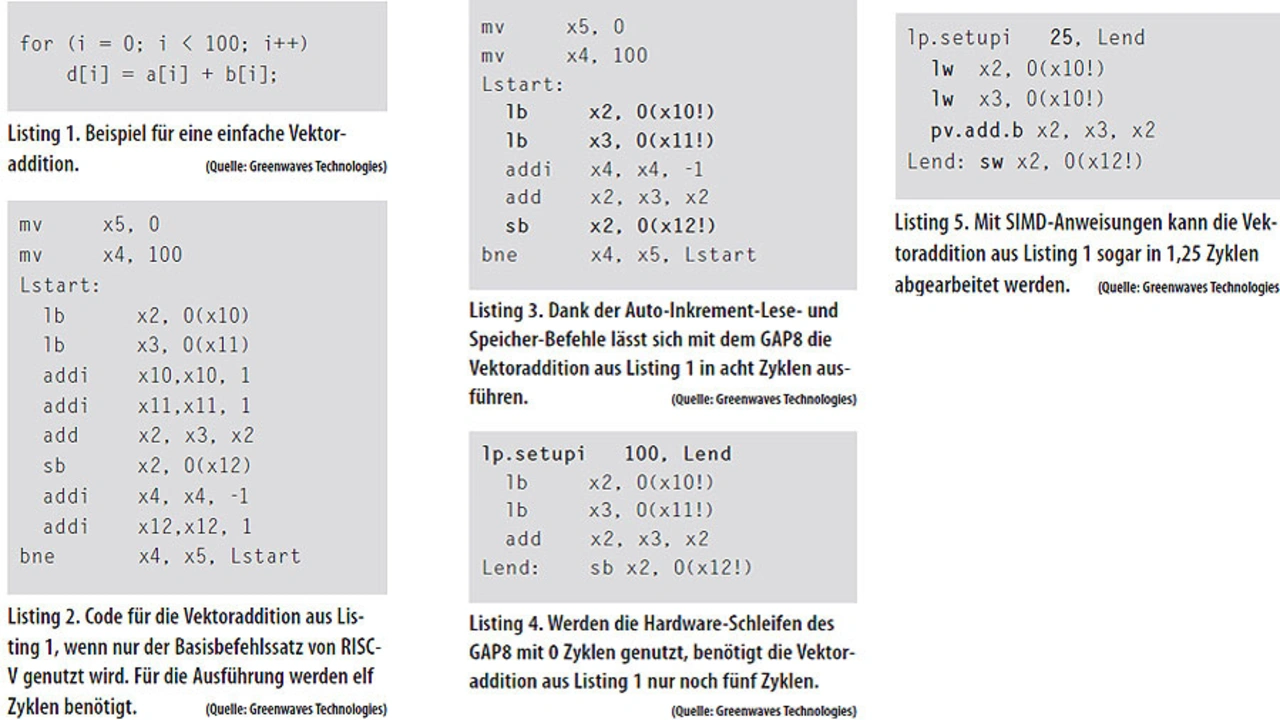

As a demonstration of the speedup effect of the ISA extensions and the cluster, let's look at a simple implementation of the addition of two arrays on the GAP8 cores (Listing 1).

A baseline implementation in the standard RISC-V ISA would yield the instructions shown in Listing 2, at 11 cycles per output.

Using the GAP8 auto-increment load and store instructions, we reduce the number of cycles to 8 per output (Listing 3).

Using the zero-cycle hardware loops, we reduce this to 5 cycles per output (Listing 4).

Finally, using the packed SIMD instructions, we reduce this to 1.25 cycles per output (Listing 5). At 11 / 1.25 = 8.8 times fewer cycles, this is close to an order of magnitude improvement over the standard RISC-V implementation.
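The idea behind the packed step can be sketched in portable C. The code below is not the GAP8 listing and uses no GAP8 intrinsics; it assumes 8-bit elements, so that four of them fit in one 32-bit word (consistent with 5 cycles producing 4 outputs), and it emulates a four-lane add in software. The names `vec_add_scalar`, `vec_add_packed` and `add4x8` are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Scalar baseline: two loads, one add and one store per output. */
void vec_add_scalar(const uint8_t *a, const uint8_t *b, uint8_t *out, size_t n)
{
    for (size_t i = 0; i < n; i++)
        out[i] = (uint8_t)(a[i] + b[i]);  /* wraps modulo 256 */
}

/* Add four 8-bit lanes packed in 32-bit words, wrapping per lane.
 * A packed SIMD instruction would do this in hardware; here it is
 * emulated so that no carry crosses a lane boundary. */
static uint32_t add4x8(uint32_t x, uint32_t y)
{
    /* Add the low 7 bits of every lane; carries stay inside the lane. */
    uint32_t low7 = (x & 0x7F7F7F7Fu) + (y & 0x7F7F7F7Fu);
    /* Fold in the top bit of every lane without generating a carry. */
    return low7 ^ ((x ^ y) & 0x80808080u);
}

/* Packed version: four outputs per loop iteration. */
void vec_add_packed(const uint8_t *a, const uint8_t *b, uint8_t *out, size_t n)
{
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        uint32_t x, y, r;
        memcpy(&x, a + i, 4);   /* alignment-safe word load */
        memcpy(&y, b + i, 4);
        r = add4x8(x, y);
        memcpy(out + i, &r, 4); /* alignment-safe word store */
    }
    for (; i < n; i++)          /* remaining 0 to 3 elements */
        out[i] = (uint8_t)(a[i] + b[i]);
}
```

Both functions produce the same results; the packed loop simply runs a quarter as many iterations, which is where the step from 5 to 1.25 cycles per output comes from when the four-lane add is a single instruction.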

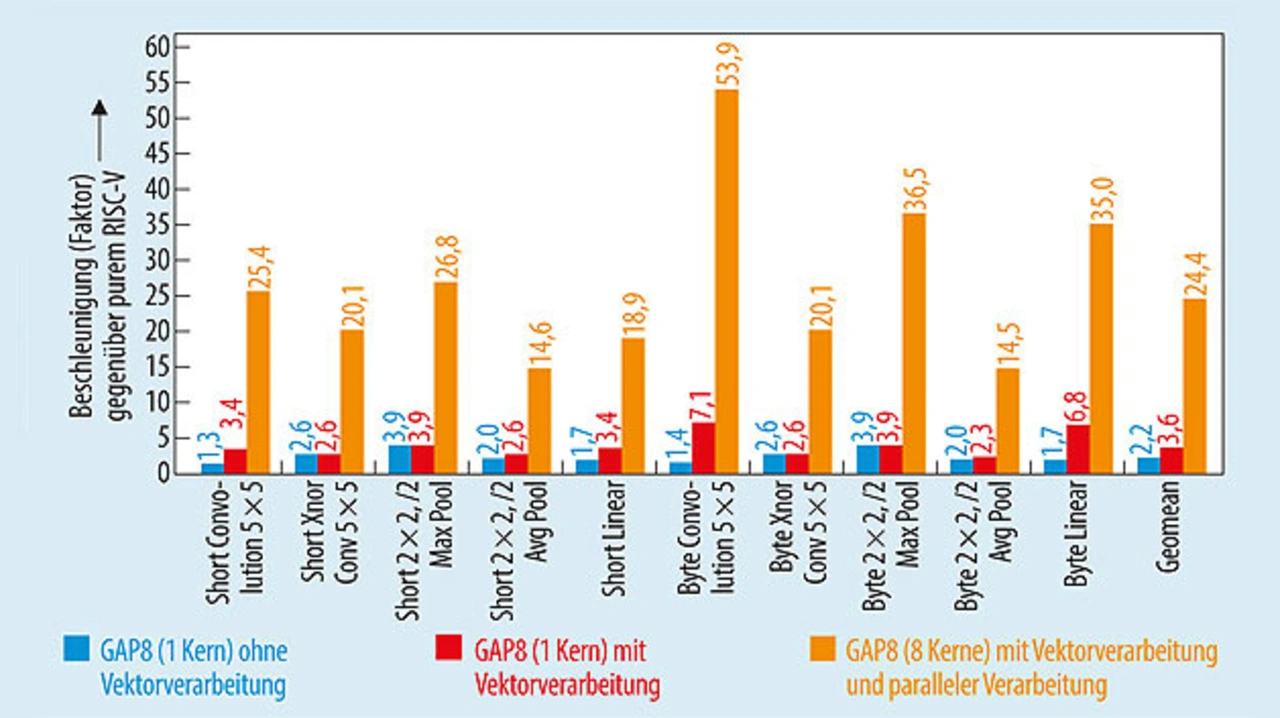

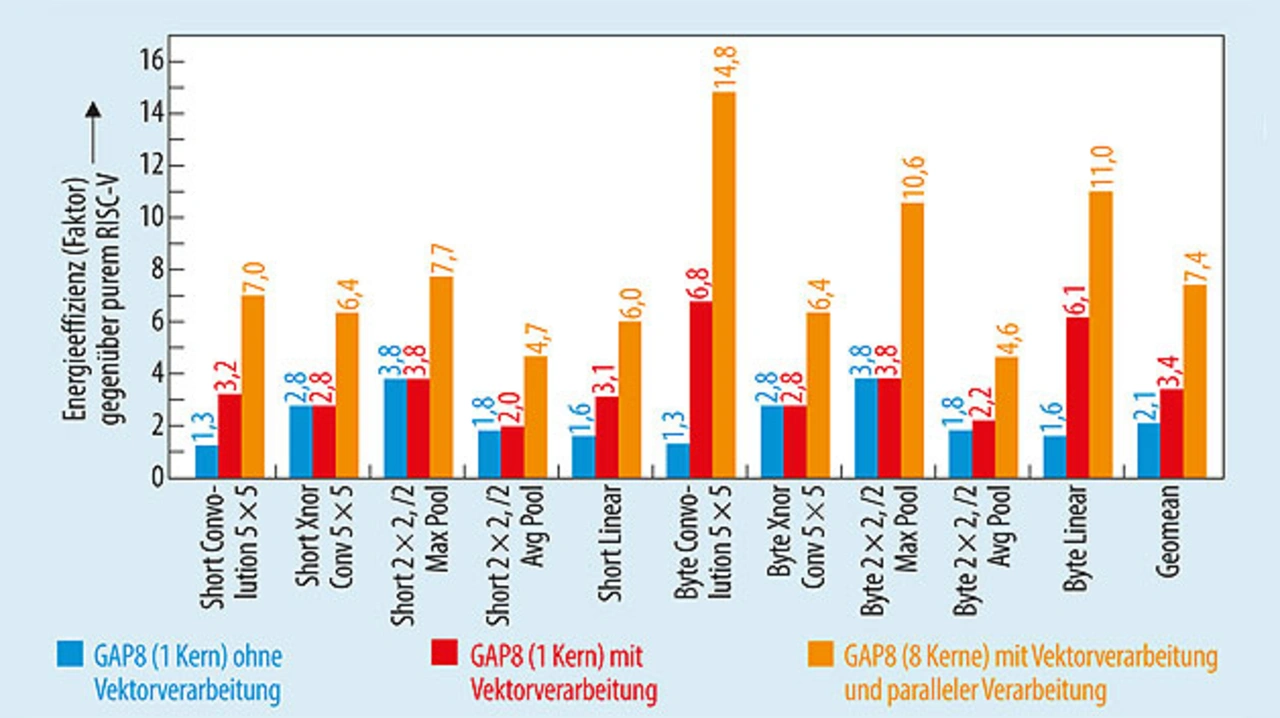

The ISA extension improvements are then combined with the parallel implementation across the cores in the cluster. The chart shows the effect of the ISA extensions and the parallel compute capability. The same optimizations used in the simple vector addition example are applied to a variety of algorithms found in modern deep and convolutional neural networks.

The reduction in the number of executed instructions, combined with architectural efficiencies such as the shared instruction cache, leads to a power efficiency gain that, together with the speedup, delivers outstanding energy efficiency on this class of algorithms.
