Voice Assistants for Smart Dialogs

»Hey Car, Listen!«

Drivers want to talk to their cars the way they talk to Siri and other virtual assistants. For comfort and safety, automotive manufacturers are turning to voice assistants as well. Information-based ‘voice brokers’ help overcome the particular challenges of integrating voice-based dialog systems.

In the smart home, more and more people talk to digital voice assistants. This new way of interacting with devices raises the expectation that the vehicle can be controlled by voice as well. Shouldn’t it be fairly easy to adapt the voice control systems already on the market to the vehicle? Not at all. In vehicles, clear, fast, safe, and secure communication matters far more than in the living room, because the goal is not to distract the driver. Yet the tools of common voice assistants offer little flexibility for developing convenient, complex in-vehicle voice interactions. Standard design tools follow a rather simplified approach to dialog design, and many of them ignore dialog phenomena that would allow designers to create a fluent, goal-oriented interaction. Examples include implicit confirmation, correction, and the in-context resolution of ambiguity (disambiguation). As a result, such user-oriented dialog behavior has to be implemented manually, which almost always means extra programming work that can be very time consuming.

Furthermore, external assistants have only limited access to data that could make voice dialogs more targeted and easier. Relevant information is spread across the vehicle, the connected smartphone, and the cloud, but each item has to be integrated into the voice dialog individually through complex programming.

Shortcomings of voice assistants

A voice assistant that understands nothing is frustrating. Speech recognition often delivers astonishingly good results, but in most cases it is not yet good enough to be truly trusted. This limits usage: voice control is only used for simple commands that the user is sure will work right away, such as “Call Sam” or “Drive to Erlangen”.

Voice control goes awry when acoustic recognition fails or when the dialog flow is too unnatural. Often the assistant simply lacks the information it would need to execute the command correctly: Where exactly in Erlangen does the user want to go? To work? Is there still enough gas in the tank? Are there any gas stations on the way to Erlangen? The voice assistant frequently lacks context and specific information about the car, its driver, the environment, and often even its own dialog history (e.g., recent conversational content). Most of today’s voice assistants are programmed for specific tasks, and only a few have access to related information. The system knows that an address consists of city, street name, and building number. What it often does not know is that a gas station sells gas, snacks, and beverages. A driver who says ‘I’m thirsty’ may therefore be sent on a detour to a supermarket instead of stopping at the next gas station and losing hardly any time.

The following sample dialog shows an interaction with different Alexa skills on a smartphone. These skills allow the system to access the vehicle’s location; however, vehicle data such as the gas level or the required fuel type cannot be accessed.

User: Take me to the gas station.

System: Do you want the Aral gas station’s address?

User: Yes.

System: OK, retrieving directions. [The navigation app starts]

User: What are the gas prices?

System: I’m sorry, I didn’t get the fuel type you want.

User: What are the gas prices? How much is E10?

System: Super E10 is EUR 1.239 at the JET gas station, located at Dusseldorfer Straße 285, and EUR 1.239 at Supol.

Although Alexa already has location information from navigating to the Aral gas station, the system forgets it by the very next question, which in fact builds on that information.

Customizing Voice Assistants

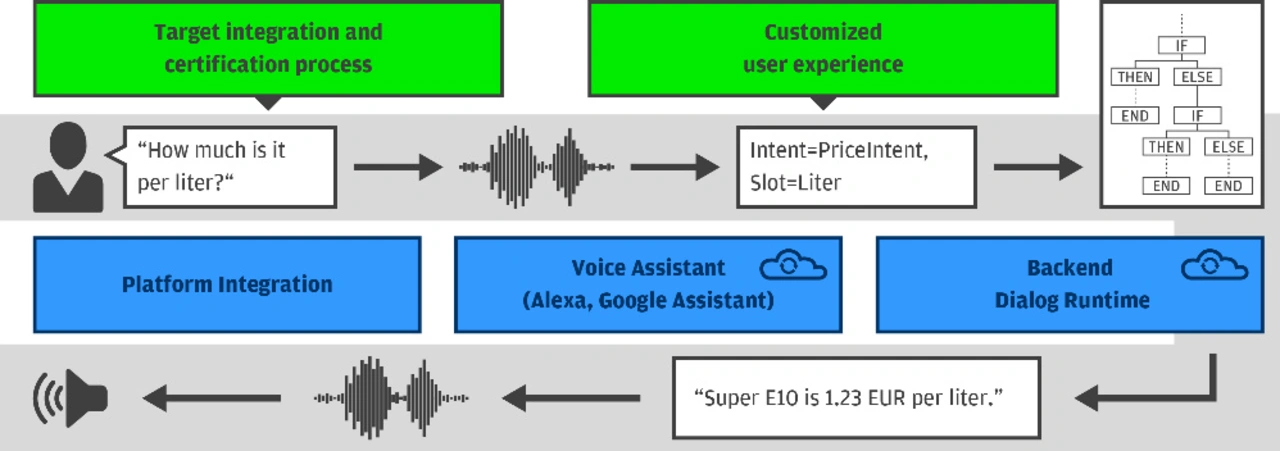

The capabilities of voice assistants can be expanded with server-based dialog systems (Fig. 1). As already mentioned, integrating a voice assistant well enough for natural communication is complex. The first step is to integrate the assistant into the appropriate platform and connect it to the infotainment system. An abstraction layer, a so-called voice broker, makes it relatively easy to integrate different voice assistants and to replace or update them over the vehicle’s life. Integrating several assistants at once, though not necessarily with all of them active simultaneously, makes sense, for example, for different parts of the world. Their functions can also overlap, for instance, to give drivers the familiar living-room experience in the vehicle.
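The broker idea can be pictured as a thin adapter layer. The following Python sketch is only an illustration under stated assumptions: the `VoiceAssistant` interface, the `VoiceBroker` class, and the `EchoAssistant` stand-in are hypothetical names, not part of any real assistant SDK.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional


class VoiceAssistant(ABC):
    """Hypothetical common interface that every integrated assistant satisfies."""

    @abstractmethod
    def handle(self, utterance: str) -> str:
        """Process a transcribed utterance and return the reply text."""


class EchoAssistant(VoiceAssistant):
    """Stand-in for a real assistant; it only echoes the request."""

    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, utterance: str) -> str:
        return f"[{self.name}] {utterance}"


class VoiceBroker:
    """Keeps several assistants registered, with exactly one active at a time."""

    def __init__(self) -> None:
        self._assistants: Dict[str, VoiceAssistant] = {}
        self._active: Optional[str] = None

    def register(self, key: str, assistant: VoiceAssistant) -> None:
        # Registering an existing key replaces the assistant (an update).
        self._assistants[key] = assistant
        if self._active is None:
            self._active = key

    def activate(self, key: str) -> None:
        if key not in self._assistants:
            raise KeyError(f"unknown assistant: {key}")
        self._active = key

    def handle(self, utterance: str) -> str:
        if self._active is None:
            raise RuntimeError("no assistant registered")
        return self._assistants[self._active].handle(utterance)


# Two assistants are integrated at once, but only one is active at a time.
broker = VoiceBroker()
broker.register("region_a", EchoAssistant("Assistant A"))
broker.register("region_b", EchoAssistant("Assistant B"))
reply_a = broker.handle("Drive to Erlangen")
broker.activate("region_b")
reply_b = broker.handle("Drive to Erlangen")
```

Because the infotainment system only talks to the broker, swapping or updating an assistant does not touch the rest of the integration.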

In a second step, the voice assistants’ functions can be extended. Additional capabilities for a certain task, such as integrating and controlling the air-conditioning system, are called ‘skills’ in Alexa and ‘actions’ in Google Assistant. If the existing dialog capabilities are not sufficient for the desired new function, custom dialog management can be implemented in the backend in a third step. It is based on the identified user intent and its parameters (slots) and initiates follow-up questions or answers. At this point, it is possible to include data from the car or the cloud and to keep this information even across skills and actions. The generic backend approach outlined below builds on a description of knowledge and on linked vehicle data, and it can generate surprisingly intelligent answers.
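A minimal Python sketch of such backend dialog management might look as follows. It assumes that the assistant delivers an intent name plus slot values; the names `Intent`, `DialogManager`, `NavigateIntent`, and the example street are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Intent:
    """Result of the assistant's language understanding (illustrative)."""
    name: str
    slots: Dict[str, Optional[str]] = field(default_factory=dict)


class DialogManager:
    """Backend step: decide between a follow-up question and an answer."""

    # Slots each intent needs before it can be executed (assumed for this sketch).
    REQUIRED = {"NavigateIntent": ["city", "street"]}

    def __init__(self) -> None:
        # The context outlives a single request, so later turns can reuse values.
        self.context: Dict[str, str] = {}

    def step(self, intent: Intent) -> str:
        # Remember every slot value the user supplied in this turn.
        for slot, value in intent.slots.items():
            if value is not None:
                self.context[slot] = value
        # Ask for the first required slot that is still unknown.
        for slot in self.REQUIRED.get(intent.name, []):
            if slot not in self.context:
                return f"Which {slot}?"
        return "OK, " + ", ".join(f"{k}={v}" for k, v in sorted(self.context.items()))


dm = DialogManager()
first = dm.step(Intent("NavigateIntent", {"city": "Erlangen", "street": None}))
second = dm.step(Intent("NavigateIntent", {"street": "Hauptstrasse"}))
```

The first turn triggers a follow-up question because the street is missing; the second turn completes the request using the city remembered from the first.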

Types and combination of knowledge

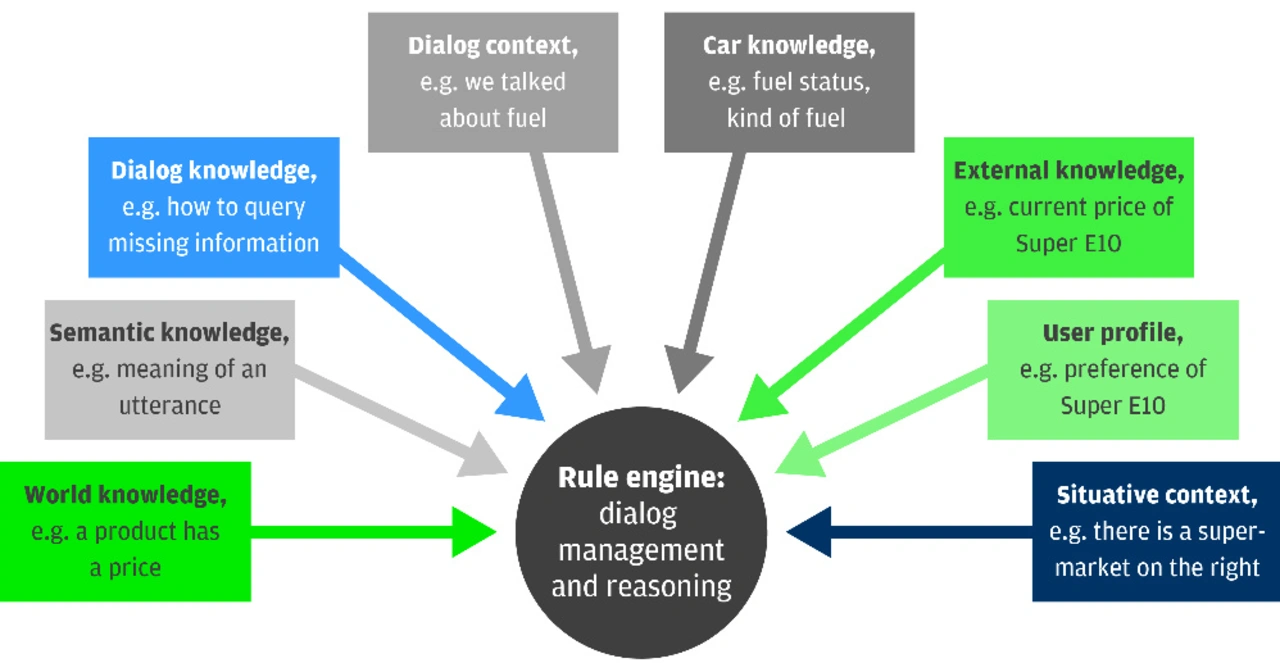

Knowledge is one of the most important keys to natural, task-oriented, and situational dialogs. Comprehensive information helps voice assistants analyze complex issues and draw intelligent conclusions. One example is answering a question such as ‘How much is it?’, comparable to the price question in the dialog above. A generic approach to answering it requires the following types of knowledge:

Semantic knowledge

The system must know what an utterance means and what it is asking for. This is typically learned statistically from a collection of example sentences. ‘How much is it?’, for instance, asks for the price of a product.

Dialog knowledge

The system must be able to compute the dialog step to be performed next. In the context of gas, ‘How much is it?’ does not require a follow-up question; it demands a direct answer that provides the correct price.

Dialog context

In natural dialogs, the speaker often refers to the dialog history. ‘How much is it?’ contains the anaphor ‘it’ that, in a particular context, can be resolved, for example, to ‘gas’.
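One simple way to resolve such an anaphor is sketched below in Python. It assumes that the dialog history stores every mentioned entity together with a class label and that the most recent entity of the expected class wins; `DialogHistory` and the class labels are hypothetical.

```python
from typing import List, Optional, Tuple


class DialogHistory:
    """Stores mentioned entities in the order in which they occurred."""

    def __init__(self) -> None:
        self._mentions: List[Tuple[str, str]] = []  # (class label, entity name)

    def mention(self, cls: str, name: str) -> None:
        self._mentions.append((cls, name))

    def resolve(self, expected_cls: str) -> Optional[str]:
        """Return the most recently mentioned entity of the expected class."""
        for cls, name in reversed(self._mentions):
            if cls == expected_cls:
                return name
        return None


history = DialogHistory()
history.mention("Organization", "gas station")
history.mention("Product", "gas")

# 'How much is it?' asks for a price, so 'it' must refer to a product.
referent = history.resolve("Product")
# Nothing of class 'Person' was mentioned, so this stays unresolved.
unresolved = history.resolve("Person")
```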

Car knowledge

Access to vehicle data is very useful. Besides the gas level, the system could also know the required fuel type, for example.

User profile

The system can remember user preferences such as E5 or E10.

World knowledge

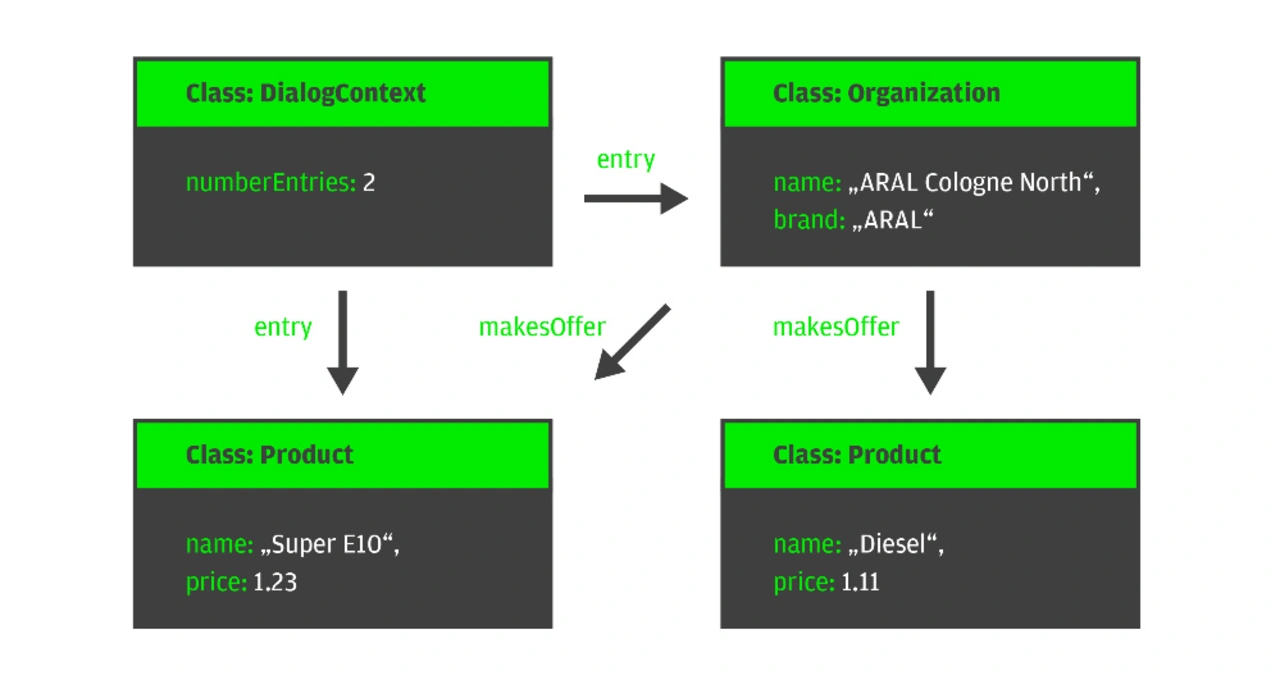

The assistant needs a description of all speech-relevant things – called classes – and their properties. In addition, it must understand the relationships between the class instances. In this case: A gas station is an organization; an organization sells products; each product has a price (Fig. 2).
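The class relationships named above can be written down as simple triples. The following Python sketch is an assumption-laden illustration, not the format used by the system described here: the relation names `subClassOf`, `sells`, and `hasPrice` are hypothetical.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]

# A gas station is an organization; an organization sells products;
# each product has a price.
ONTOLOGY: List[Triple] = [
    ("GasStation", "subClassOf", "Organization"),
    ("Organization", "sells", "Product"),
    ("Product", "hasPrice", "Price"),
]


def superclasses(cls: str) -> List[str]:
    """Return cls followed by all of its ancestors, nearest first."""
    chain = [cls]
    while True:
        parents = [o for s, p, o in ONTOLOGY if s == chain[-1] and p == "subClassOf"]
        if not parents:
            return chain
        chain.append(parents[0])  # single inheritance is enough for this sketch


def relations_of(cls: str) -> Set[Tuple[str, str]]:
    """Collect (relation, target) pairs that cls declares or inherits."""
    ancestors = superclasses(cls)
    return {(p, o) for s, p, o in ONTOLOGY if s in ancestors and p != "subClassOf"}


def can_answer_price(cls: str) -> bool:
    """True if cls sells something that has a price."""
    return any(
        rel == "sells" and ("hasPrice", "Price") in relations_of(target)
        for rel, target in relations_of(cls)
    )


gas_station_has_prices = can_answer_price("GasStation")
```

The gas station never states that it sells anything; it inherits that relation from the organization class, which is what makes a generic price question answerable.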

External knowledge

Dynamic properties of classes can be integrated using a REST API; e.g., the current price of the product gas E10 at a gas station. External knowledge is also needed for questions like ‘Is the supermarket still open?’ or ‘What does the yellow warning light mean?’.
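How a dynamic property might be fetched on demand can be sketched as follows. This Python example makes several assumptions: the `KnowledgeAbstraction` class and the plug-in signature are hypothetical, and the REST call is replaced by a local stub so that the example runs without a network; the stub price is sample data taken from the dialog shown earlier.

```python
from typing import Callable, Dict, Optional

# A price source takes (station, product) and returns a price in EUR, or None.
PriceSource = Callable[[str, str], Optional[float]]


class KnowledgeAbstraction:
    """Routes requests for dynamic properties to one plug-in per product class."""

    def __init__(self) -> None:
        self._plugins: Dict[str, PriceSource] = {}

    def register(self, product_class: str, source: PriceSource) -> None:
        self._plugins[product_class] = source

    def price(self, product_class: str, station: str, product: str) -> Optional[float]:
        source = self._plugins.get(product_class)
        if source is None:
            return None  # no plug-in knows this product class
        return source(station, product)


def stub_gas_prices(station: str, product: str) -> Optional[float]:
    """Stand-in for a REST request to a fuel-price service (sample data only)."""
    table = {("JET", "E10"): 1.239}
    return table.get((station, product))


knowledge = KnowledgeAbstraction()
knowledge.register("Gas", stub_gas_prices)
e10_price = knowledge.price("Gas", "JET", "E10")
snack_price = knowledge.price("Snack", "JET", "Cola")
```

In a real system the stub would be replaced by an HTTP client, while the dialog logic that calls `price` would stay unchanged.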

Situational context

Knowledge of the immediate surroundings can be obtained from the vehicle’s position or from within the vehicle by giving the system access to everything that is shown on the displays. The system thus knows the supermarkets within a certain radius or a defect shown on the display.

Even if the required knowledge is available on a comprehensive scale, it can be processed automatically only when it is represented in a uniform way. Classes such as an organization, individual instances (e.g., Esso gas station Cologne North of the Organization type), and relations (e.g., ‘makesOffer’ to relate the gas station to specific products) are uniformly represented in the form of an ontology. This uniform description allows the system to draw automatic conclusions (‘reasoning’) and to generate useful responses (Fig. 3). Dialogs that consider the context correctly can be multimodal, outcome-driven, and natural. The user can focus fully on driving.

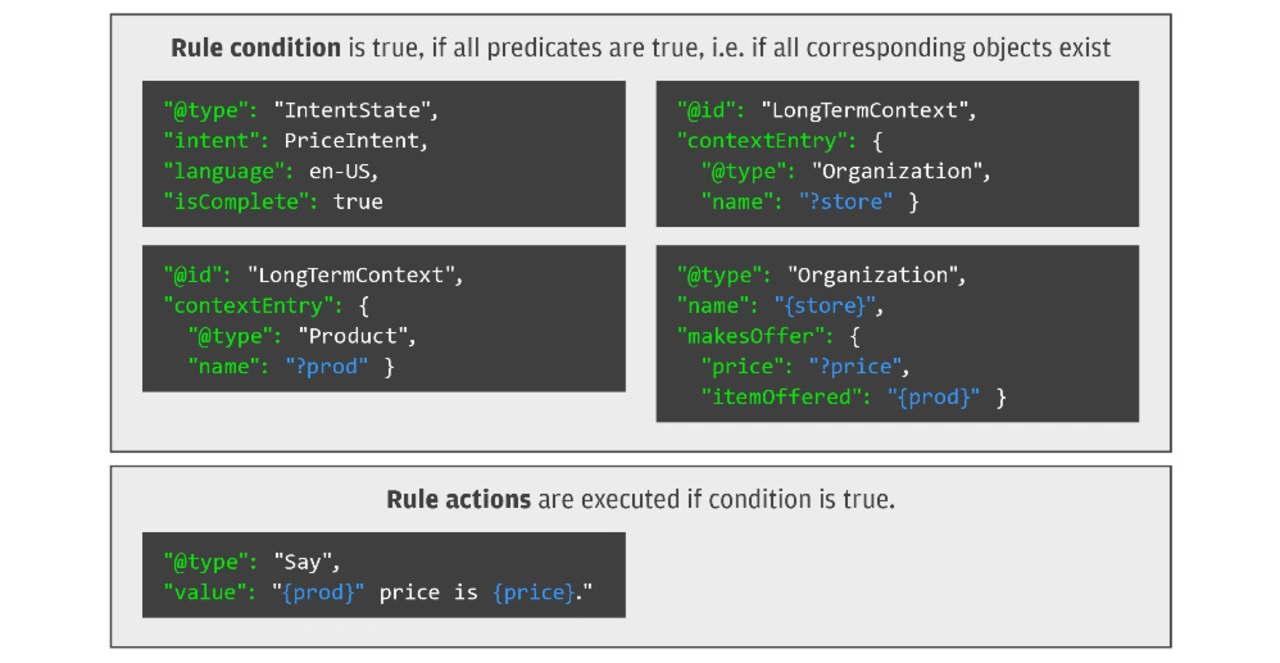

The main features of this approach are the description format, a knowledge abstraction for retrieving external knowledge on demand, the integration of new knowledge sources, and a rule engine in the backend. This engine initiates and answers dialogs or asks follow-up questions using the ontology, the rules described in it, and the knowledge database (Fig. 4). These aspects are independent of the integrated voice assistant; as a result, the dialogs can easily be applied to one or more other assistants.

This information-based approach allows developers to expand voice assistants purely declaratively without programming and to develop custom functionalities and dialog behavior for different automotive brands. Appropriate tooling enables automated dialog testing even without any voice assistant.

Practical example: ‘How much is it?’

The following proactive dialog allows us to briefly outline the rule-based approach and the integration of knowledge.

System: You’ll have to stop at a gas station soon. Cologne is 50 km away, but you’ll run out of gas in 30 km.

Driver: Thank you. Where can I find a gas station?

System: The cheapest one near you is an Aral gas station 20 km away.

Driver: How much is it?

System: Super E10 there is EUR 1.23.

Driver: OK, take me there.

System: Got it. I’ve added the gas station as an interim destination. We’ll be there at 6:40 p.m.

‘It’ and ‘there’ are two anaphors. With the information-based approach, the system resolves ‘How much is it?’ generically, so the correct answer can be computed in contexts other than the gas station as well. The dialog backend (Fig. 1) starts after the semantic classification process. The rule engine then checks its rules, each of which consists of conditions and actions. Very few rules require the identified user intent; here, the ‘PriceIntent’ is checked in the first part of the condition (Fig. 3).

The corresponding object was written to the knowledge database after recognition. Another condition to be checked is the existence of a specific organization in the database. The organization (‘{store}’ variable for the name) must offer a specific product (‘{prod}’ variable). Organization name (Aral) and product name (E10) are retrieved from the knowledge database using parallel conditions. This process checks which objects of the Organization and Product classes were linked to the LongTermContext/User object in a previous dialog step. The ‘?store’ and ‘?prod’ syntax means that these variables are assigned matching values. Finally, the product’s price (?price) is resolved in the background by a plug-in component during the ‘organization existence check’. This component obtains prices for products of the Gas class. Further services, such as prices of other products, are covered by additional plug-ins hidden behind the voice broker’s knowledge abstraction layer. In the end, all conditions can be checked successfully because the objects exist in the knowledge database and the ?prod, ?store, and ?price variables can be read. The action defined in the rule is performed (an object of the ‘Say’ class), and the answer ‘Super E10 there is EUR 1.23’ is generated.
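The rule evaluation described above can be approximated in a few lines of Python. This is a sketch under stated assumptions, not the rule format of the system discussed here: the knowledge database is a plain dictionary, the price plug-in is replaced by a lookup table, and all function and key names are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Bindings = Dict[str, object]
# A condition inspects the knowledge database and may bind variables.
Condition = Callable[[dict, Bindings], bool]


def has_intent(name: str) -> Condition:
    """Condition: the recognized user intent matches the given name."""
    return lambda kb, b: kb.get("intent") == name


def bind_linked(var: str, cls: str) -> Condition:
    """Condition: an object of class cls is linked to the context; bind it to var."""
    def check(kb: dict, b: Bindings) -> bool:
        name = kb.get("context", {}).get(cls)
        if name is None:
            return False
        b[var] = name
        return True
    return check


def bind_price(kb: dict, b: Bindings) -> bool:
    """Condition: the store offers the product; bind its price (plug-in stand-in)."""
    price = kb.get("offers", {}).get((b["store"], b["prod"]))
    if price is None:
        return False
    b["price"] = price
    return True


class Rule:
    def __init__(self, conditions: List[Condition], action: Callable[[Bindings], str]) -> None:
        self.conditions = conditions
        self.action = action

    def fire(self, kb: dict) -> Optional[str]:
        bindings: Bindings = {}
        # all() stops at the first failing condition, so later conditions
        # can rely on variables bound by earlier ones.
        if all(cond(kb, bindings) for cond in self.conditions):
            return self.action(bindings)
        return None


price_rule = Rule(
    [has_intent("PriceIntent"), bind_linked("store", "Organization"),
     bind_linked("prod", "Product"), bind_price],
    lambda b: f"{b['prod']} there is EUR {b['price']:.2f}.",
)

kb = {
    "intent": "PriceIntent",
    "context": {"Organization": "Aral", "Product": "Super E10"},
    "offers": {("Aral", "Super E10"): 1.23},
}
answer = price_rule.fire(kb)
```

Nothing in the rule mentions gas: if a different organization and product were linked to the context and a plug-in supplied the price, the same rule would answer the question.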

Voice is the Future

Even if the design of today’s voice assistants does not necessarily meet the requirements for natural dialogs, many consumers want to control their vehicle by voice. The functionality of external voice assistants available on the market can be expanded and improved by server-based dialog systems to meet the specific requirements in the vehicle. Convenient use, intelligence, and the natural functionality of voice assistants are important differentiators. Automotive manufacturers can benefit greatly from the rapidly evolving technology and quality of voice control and set themselves apart from the competition. Since high-quality voice assistants already come as standard in a number of luxury-class models, in-vehicle voice control systems can be expected to develop significantly and become more and more common. UH
