Delivering, Updating, and Operating Edge AI

“We provide the software environment for edge AI”

Embedded or edge AI is becoming widespread in industry. But how can AI models, with the help of the cloud if necessary, be deployed at the edge, kept up to date, and operated there? Johannes M. Biermann, President & COO of aicas, explains how this works and describes the solution the company offers.

Elektronik: How can AI and ML models be trained and implemented?

Johannes M. Biermann: Model-based artificial intelligence rests on a system of complex algorithms designed to recognize patterns automatically, make predictions, or even make decisions independently. A widely used approach is supervised learning, a machine learning (ML) method, applied to an artificial neural network (ANN). Training and applying such a model have fundamentally different requirements. For training, especially the initial training, it is important to provide a large amount of high-quality data from the intended use case: the more comprehensively and thoroughly the data represents the use case in all possible environmental and system states, the better and more efficiently the model will function.

Training therefore usually takes place centrally in the cloud or in a data center, where the computing resources and tools needed to develop models with realistic, domain-specific data sets are available. Once training is complete, the models are transferred to their target devices at the edge, where they are used for inference. In this application phase, the so-called inference phase, the model makes an assessment or decision based on an input data stream, and depending on the application it is desirable or even critical that execution is fast, or at least completes in a timely manner. aicas therefore pays particular attention to the following aspects of AI solutions:

➔ The initial collection of good training data;

➔ Secure data transfer from the target device at the edge to the training system in the cloud or the customer's private data center;

➔ The secure transfer of the model and the AI application that uses the model from development in the cloud or data center to the target device;

➔ The monitored control and execution of the AI application and model on the target device, with particular attention to realtime requirements;

➔ The continuous maintenance, improvement, and enhancement of the system through operational data collection and software updates.

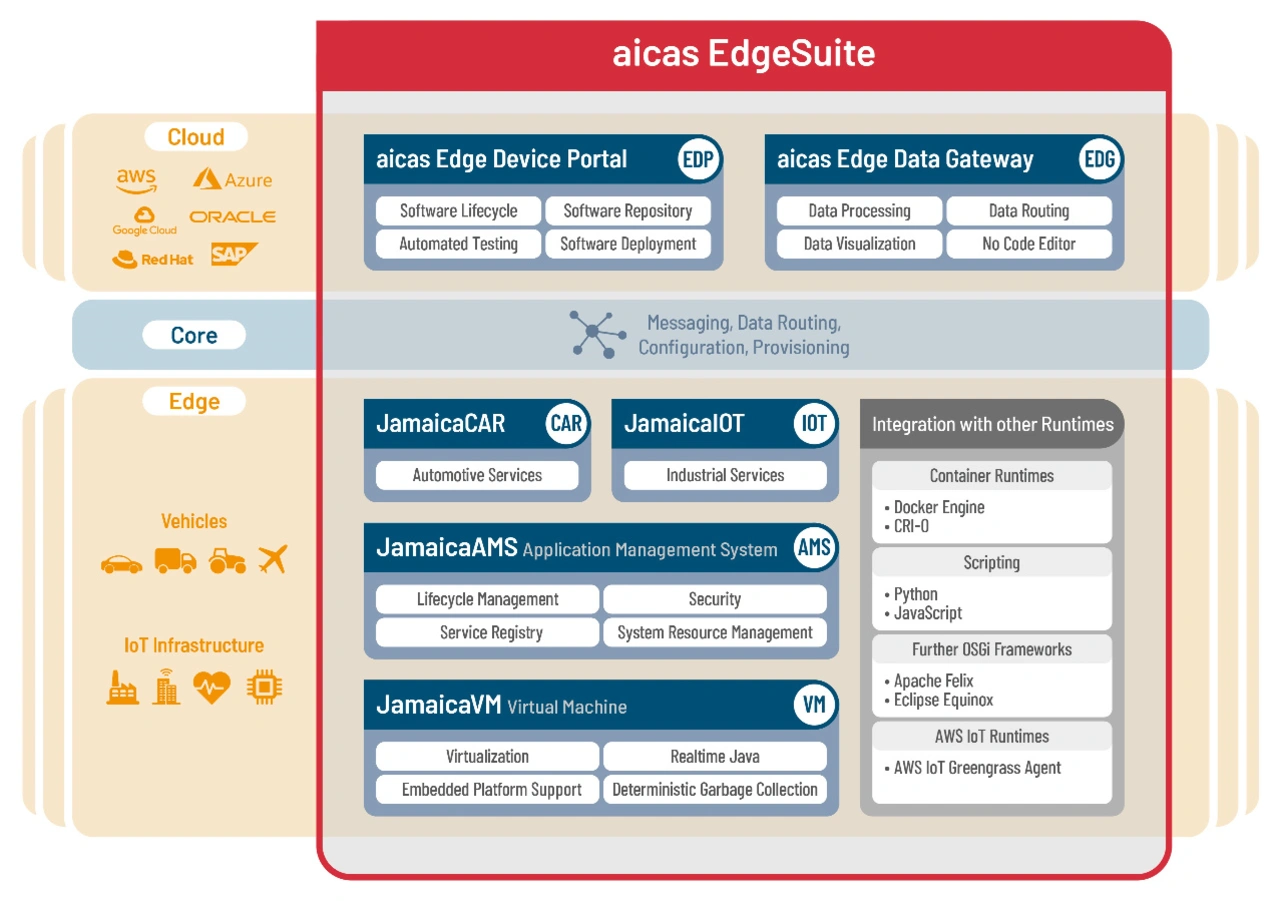

aicas achieves this with the modular “EdgeSuite” platform. The JamaicaAMS runtime environment, supplemented by a web-based, user-friendly interface for data and software management, enables fine-grained control and isolation of components. Models can be delivered, updated, or reset individually. Possible use cases include model-based prediction of driving behavior, wear and tear, temperature, or battery status for remote and predictive maintenance, as well as data-centric applications across devices and vehicle fleets.

What hardware and software are required for this?

As described, the requirements for training on the one hand and execution on the other are significantly different. Entire hardware farms are used to train ML models, and cloud providers such as aicas' partner AWS offer services for creating your own models. There is now also an ecosystem of pre-trained models, available as services and open-source components, that already perform certain tasks, such as text recognition for natural language input, and only need to be adapted to the specific use case. Either way, training usually requires more resources than are available on a typical developer system, let alone at the edge. At the edge, where the model is used for automatic inference, today's development focuses on specialized hardware: a powerful processor, such as the S32G3 Vehicle Network Processor from aicas' partner NXP, is supplemented by chips specialized in executing neural networks and the matrix multiplications they require. These are often processing cores similar to those used in graphics cards.

The main processor is tasked with feeding the input data stream to the model, monitoring the execution (inference), retrieving the inference result, and executing the application logic so that the system responds as intended. It also controls and manages the general operation of the system, which in this case particularly includes managing and updating applications, remote control, and providing data for control and for analysis aimed at possible improvements. This requires a runtime environment such as JamaicaAMS, which ensures modularity, updatability, and stability at the edge device level. In addition, the cloud-based aicas Edge Device Portal (EDP) provides a central instance for managing models, updates, and device status, even for large, heterogeneous fleets. The solution integrates seamlessly with existing DevOps and CI/CD pipelines on the cloud, server, and backend side and supports continuous maintenance and enhancement of the target system during operation.
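To make the main processor's role in the inference loop more concrete, here is a minimal Python sketch of one monitored inference cycle. It is not aicas code: the `model` callable, the threshold, the decision labels, and the fallback behavior are hypothetical. Note also that `time.monotonic()` only measures latency after the fact, whereas a realtime runtime would enforce deadlines through scheduling.

```python
import time
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class CycleResult:
    decision: str          # outcome of the application logic
    latency_s: float       # measured inference time in seconds
    deadline_missed: bool  # True if the result arrived too late


def run_cycle(model: Callable[[Sequence[float]], float],
              sample: Sequence[float],
              deadline_s: float,
              threshold: float = 0.5) -> CycleResult:
    """Bring one input to the model, time the inference, and act on the result.

    The overrun is only detected after inference returns; a realtime runtime
    would enforce the deadline by scheduling rather than by measuring it.
    """
    start = time.monotonic()
    score = model(sample)                  # inference step (hypothetical model callable)
    latency = time.monotonic() - start
    missed = latency > deadline_s
    if missed:
        decision = "safe_default"          # result is stale: fall back instead of acting on it
    elif score >= threshold:
        decision = "alert"                 # application logic reacts to a high score
    else:
        decision = "normal"
    return CycleResult(decision, latency, missed)
```

A model call that takes longer than the configured deadline, for example, yields `safe_default` rather than a decision based on a late result.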

To what extent does it make sense to distribute AI and ML applications across edge and cloud?

As explained earlier, training and inference have fundamentally different requirements, so splitting them between cloud and edge is preferable in the vast majority of cases. Beyond central control from the cloud, combining multiple edge systems into a larger whole has further advantages: data that multiple target systems collected in the same context but under different environmental and system conditions can be compared and used for training. There are also interesting approaches to federated learning, in which a model is trained jointly toward a common goal, even across company boundaries, by sharing model updates rather than the raw data itself.
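The aggregation step at the heart of many federated learning setups can be sketched as federated averaging (FedAvg): each participant trains locally and sends only its parameter vector and sample count, and a coordinator computes a sample-weighted mean. The function below is a minimal illustration with plain Python lists; its name and parameters are hypothetical, and real systems add secure aggregation, compression, and validation of client updates.

```python
from typing import List, Sequence


def federated_average(client_weights: List[Sequence[float]],
                      client_sizes: List[int]) -> List[float]:
    """FedAvg aggregation: average client parameters weighted by local sample count.

    Only parameter vectors leave the participants; their raw training data does not.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    n_params = len(client_weights[0])
    averaged = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != n_params:
            raise ValueError("all clients must share the same model shape")
        for i, value in enumerate(weights):
            averaged[i] += value * size / total   # larger local data sets weigh more
    return averaged
```

The coordinator then redistributes the averaged parameters as the starting point for the next local training round.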

More interesting, and a topic of current research, is dividing the operational system itself, that is, the part that makes estimates, performs inference, and takes actions during operation. For resource-constrained devices in particular, a hybrid approach is attractive for some use cases: a small edge AI system recognizes an “I need to ask someone else” state and then communicates with a larger AI system running on a central, more powerful system or in the cloud. Basically, the goal is to make the best use of the insights gained through training in highly scalable cloud infrastructures and apply them to decisions at the edge level, for example in actuators or sensors. Conversely, in the edge-to-cloud direction, insights and observations at the edge device level should be transferred to the cloud more quickly and comprehensively so that this data can be incorporated into ongoing training in the AI cloud data centers.
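One simple way to implement the “I need to ask someone else” state is a confidence threshold on the edge model's output. The sketch below is an assumption, not a description of any aicas product: `edge_model`, `cloud_model`, and the 0.8 threshold are hypothetical, and the maximum softmax probability is only a rough uncertainty signal, because neural networks can be overconfident.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_with_fallback(sample: Sequence[float],
                           edge_model: Callable[[Sequence[float]], Sequence[float]],
                           cloud_model: Callable[[Sequence[float]], str],
                           labels: Sequence[str],
                           min_confidence: float = 0.8) -> Tuple[str, str]:
    """Return (label, source); defer to the larger model when the edge model is unsure."""
    probs = softmax(edge_model(sample))        # edge_model returns raw logits
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] >= min_confidence:
        return labels[best], "edge"            # confident enough to decide locally
    # "I need to ask someone else": hand the sample to the larger model
    return cloud_model(sample), "cloud"
```

Choosing the threshold is a trade-off: a higher value sends more samples to the larger model, which costs bandwidth and latency and depends on connectivity.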

How can such a distribution be achieved?

With aicas EdgeSuite, models can be packaged as isolated components, versioned, and distributed in a targeted manner. Models and applications are delivered to defined target systems via the Edge Device Portal. Delivery is controlled either automatically or based on conditions, for example only to vehicles with certain characteristics, such as a specific firmware or a specific software version or higher. The entire update process is encrypted, signed, and monitorable. Customers can derive actions and subsequent versions from the feedback data transmitted on model performance, such as accuracy or resource consumption. The update process is designed to run without interrupting the running system or process and to ensure integrity, security, and traceability at all times.
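A condition-based campaign like the one described above boils down to filtering the fleet by device properties. This minimal sketch uses a hypothetical `Device` record and selection function (not the Edge Device Portal's API) and compares version strings numerically, since a plain string comparison would wrongly rank 2.10 below 2.9.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """'2.10.1' -> (2, 10, 1); numeric parts avoid '2.10' < '2.9' string errors."""
    return tuple(int(part) for part in text.split("."))


def version_at_least(version: str, minimum: str) -> bool:
    a, b = parse_version(version), parse_version(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '2.1' and '2.1.0' compare as equal
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


@dataclass
class Device:
    device_id: str
    firmware: str
    software_version: str
    attributes: Dict[str, str] = field(default_factory=dict)


def select_targets(devices: List[Device], firmware: str, min_software: str,
                   required: Dict[str, str]) -> List[str]:
    """Return IDs of devices that satisfy every condition of the campaign."""
    return [
        d.device_id for d in devices
        if d.firmware == firmware
        and version_at_least(d.software_version, min_software)
        and all(d.attributes.get(k) == v for k, v in required.items())
    ]
```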

Which aspects of AI and ML applications should run at the edge, and which in the cloud?

Inference processes that require a local, fast, and reliable response, or that must keep data local for data protection reasons, should primarily take place at the edge. Examples include realtime anomaly detection, machine condition monitoring, and image analysis in vehicles. Incidentally, these considerations apply equally to commercial (e.g., industrial facilities), public (keyword: smart city), and military applications (example: drones), all areas in which aicas supports its customers. The cloud, on the other hand, is the place for tasks such as training and data preparation and processing, including data aggregation across multiple devices, cross-fleet analysis, and simulation. The cloud is also particularly useful for more comprehensive optimizations, for example when a fleet operator analyzes the behavior of models across different locations or usage scenarios.

What could and should corresponding Edge-to-Cloud architectures look like?

The requirements for a robust Edge-to-Cloud architecture are:

➔ Uniform, standardized data models, such as COVESA's Vehicle Signal Specification (VSS);

➔ Self-explanatory, cross-platform data solutions, such as the Edge Data Gateway (EDG) with JamaicaIOT;

➔ Secure edge systems, with hardware security as the root of trust and a chain of trust anchored in the user's security architecture;

➔ Secure communication channels anchored in the user's security architecture, for example through a public key infrastructure (PKI) that authenticates all hardware and software components;

➔ Robust lifecycle management for applications and models, for example EDP with JamaicaAMS, including version management, authenticated components, and restrictive, role-based access rules;

➔ A modular software platform with commercially available, standardized interfaces that can bridge heterogeneous systems. Example: JamaicaAMS and JamaicaVM with Java, Realtime Java, and OSGi APIs.

With the combination of Edge Device Portal, JamaicaAMS, and Edge Data Gateway, aicas offers a complete framework for this. Our architecture has already been used in projects with AWS and Swissbit, including for condition-based over-the-air (OTA) update campaigns in safety-critical applications.

How can AI and ML applications distributed across edge and cloud be synchronized?

aicas use cases often involve moving target systems with intermittent connectivity; a car in a tunnel is a good example. When designing the architecture of such solutions, it is therefore essential that the managing backend and the remote edge system can handle divergent states. Many approaches from the IoT can be applied, adapted, or transferred here. The most important element is an autonomous software agent that can perform operational functions even without a connection to the backend and ensures that synchronization takes place as soon as the connection is re-established. The agent also helps meet the robustness requirements for software updates. In aicas’ solutions, this is supplemented by clever packaging, which enables authentication and authorization of a package's use by specific users on a specific system, by version management, and by “live bill of materials” listings of all system components. Finally, aicas uses lightweight virtualization on the target system, which prevents software components from deliberately or unintentionally crippling the system while enabling system developers to use diverse and powerful debugging tools, including for remote diagnostics.
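The behavior of such an autonomous agent during a connectivity gap can be illustrated with a minimal store-and-forward queue. The class below is a hypothetical sketch, not aicas' agent: it buffers reports while offline, flushes them in order once the link returns, and keeps a message queued if sending it fails.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForwardAgent:
    """Buffers outgoing reports and delivers them in order when connected."""

    def __init__(self, send: Callable[[dict], None]):
        self._send = send                  # transport callable (hypothetical); raises ConnectionError on failure
        self._outbox: Deque[dict] = deque()
        self.connected = False

    def report(self, message: dict) -> None:
        """Always queue first, then try to deliver if the link is up."""
        self._outbox.append(message)
        if self.connected:
            self.flush()

    def set_connected(self, connected: bool) -> None:
        """Called by the connectivity monitor; reconnecting triggers synchronization."""
        self.connected = connected
        if connected:
            self.flush()

    def flush(self) -> int:
        """Send queued messages oldest-first; stop and keep the rest on failure."""
        sent = 0
        while self._outbox and self.connected:
            try:
                self._send(self._outbox[0])
            except ConnectionError:
                self.connected = False     # link dropped again, e.g. entering a tunnel
                break
            self._outbox.popleft()         # remove only after a successful send
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._outbox)
```

This gives at-least-once delivery: if the link drops after the backend received a message but before the send call returned, the message is sent again, so the backend should deduplicate, for example by a sequence number.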

What solutions does aicas offer for the implementation and application of AI and ML models at the edge and in the cloud?

aicas offers a modular, complete solution: the Edge Device Portal can be used to manage, version, and securely deliver (AI) models and applications. The JamaicaAMS runtime environment ensures that applications run in an isolated, flexible, and fault-tolerant manner on embedded devices, that is, at the edge. The Edge Data Gateway supports intelligent data selection, visualization, and preprocessing for machine learning. A practical example is our collaboration with Swissbit, in which aicas EdgeSuite is used to distribute pre-trained AI models robustly and securely, including encrypted updates, a root of trust in a physical memory element (secure element), and “live” status feedback. The project shows how secure distribution can be reliably implemented in a use case for distributed AI in safety-critical applications.

How can AI and ML models be updated at the edge?

Models are distributed via the Edge Device Portal in the same way as software components. The system automatically checks the signature, target device, compatibility, and permissions. The solution is designed so that updates can be carried out without interrupting operations and are protected against manipulation and malfunctions.
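The four checks named above (signature, target device, compatibility, permissions) can be sketched as an admission function that collects every failed check. All names here are hypothetical. For brevity the sketch uses an HMAC-SHA256 tag from Python's standard library as a stand-in for a signature; a production system would instead use asymmetric signatures verified against a public key from the PKI, so that devices never hold a signing secret.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class UpdatePackage:
    name: str
    payload: bytes
    signature: bytes          # HMAC-SHA256 tag over payload (stand-in for a real signature)
    target_device_type: str
    min_runtime_version: int
    required_role: str


@dataclass(frozen=True)
class DeviceContext:
    device_type: str
    runtime_version: int
    granted_roles: FrozenSet[str]


def check_update(pkg: UpdatePackage, device: DeviceContext, key: bytes) -> List[str]:
    """Return the names of all failed checks; an empty list means the update may be installed."""
    problems = []
    expected = hmac.new(key, pkg.payload, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, pkg.signature):   # constant-time comparison
        problems.append("signature")
    if pkg.target_device_type != device.device_type:
        problems.append("target")
    if device.runtime_version < pkg.min_runtime_version:
        problems.append("compatibility")
    if pkg.required_role not in device.granted_roles:
        problems.append("permission")
    return problems
```

Collecting all failures instead of stopping at the first one makes the result more useful for the update logging and reporting discussed below.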

What software tools does this require?

What is required is JamaicaAMS as a modular execution environment on the target system, the Edge Device Portal as a software management and distribution platform, and, optionally, integration into MLOps pipelines with CI/CD, for example via Jenkins, GitLab pipelines, or AWS CodePipeline. In addition, aicas supports the use of established ML tools such as Keras/TensorFlow, Python-based frameworks, and customer-specific mechanisms for monitoring execution and model quality in the field.

What needs to be considered regarding safety and security?

In general, but especially in regulated industries such as automotive or medical technology, it is essential that model and software updates are robust, secure, transparent, and traceable. aicas relies on standardized state-of-the-art security mechanisms, including:

➔ Integration of aicas edge components into a chain of trust;

➔ TLS-encrypted communication with mutual authentication in a customer-managed public key infrastructure;

➔ Signature verification for validation, authentication, and authorization of all (software) packages;

➔ Targeted and secure logging and reporting of all update processes;

➔ Static and dynamic descriptions of the system for continuous comparison and misuse detection;

➔ Continuous (CVE) screening of aicas software components and their dependencies.
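The idea of comparing a static description of the system with its observed state can be illustrated with a hash-based bill of materials. In this hypothetical sketch, the expected manifest maps component names to SHA-256 digests and is compared with the components actually found on the device; the component names are made up.

```python
import hashlib
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def compare_bom(expected: Dict[str, str], observed: Dict[str, bytes]) -> Dict[str, List[str]]:
    """Compare an expected manifest (name -> digest) with the components present on the device."""
    observed_hashes = {name: sha256_hex(blob) for name, blob in observed.items()}
    shared = set(expected) & set(observed_hashes)
    return {
        "missing": sorted(set(expected) - set(observed_hashes)),     # declared but absent
        "unexpected": sorted(set(observed_hashes) - set(expected)),  # present but undeclared
        "modified": sorted(n for n in shared if expected[n] != observed_hashes[n]),
    }
```

Any non-empty list would be a reason to report the device or to block further updates until the deviation is explained.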

Our approach not only protects against unauthorized access and manipulation but also ensures operational stability. Sensitive model data remains protected throughout transmission and storage.

What is aicas' solution for this?

aicas offers a self-contained system for the secure, scalable, and controlled deployment and operation of AI models at the edge. The component-based approach allows updates to be performed securely in isolated environments. Combined with hardware security modules, as in the Swissbit use case, the system is suitable for particularly security-critical applications. The realtime capabilities of aicas' JamaicaVM also make it suitable for time-critical applications. The solution ensures not only that an AI system can be operated robustly, but also that it can be continuously maintained, secured, and improved with real operating data throughout its lifecycle. Our solution protects sensitive information, integrates with existing systems, software, and development tools, and provides operational transparency, from code development or model training to runtime on the device.

The questions were asked by Andreas Knoll.