New High End CPU

Arm Cortex-A77 – faster even without Moore's Law

Continuation of the article from Part 1

Further development of the NPU

In early 2018, Arm announced its "Project Trillium", a processor IP for machine learning based on neural networks, also called an NPU (Neural Network Processing Unit). The NPU is intended to complement CPUs and GPUs in neural network operations, and it has now received an update as well.

In contrast to data center-based approaches, Arm wants to make machine learning possible "at the edge", i.e. directly in the end device. At first glance, this has numerous advantages, such as lower latency, since data no longer has to be transferred to and from the data center, and lower cost, for example because the (wireless) data transfer is eliminated. Another aspect is data security: the data no longer leaves the end device and can therefore not be intercepted by hackers in the data center or on the way there.

The challenge with edge computing, however, is that the computation-intensive operations central to neural networks, such as matrix multiplications, either cannot be carried out on conventional CPU and GPU architectures at all or only with enormous hardware effort, because neither CPU nor GPU microarchitectures were designed for this type of workload (e.g. two-dimensional MAC operations on matrices). Special NN processors were developed in response to these challenges.

Arm's machine learning processor is based on a new architecture designed specifically for neural networks. It is scalable from mobile phones to data centers and is designed to cover three key aspects of this type of workload: efficient convolution computation, efficient data movement and sufficiently simple programmability. The design comprises 16 "compute engine" units for the actual data computation, a memory subsystem and a programmable control block consisting of a microcontroller and a DMA engine. Convolution computations typically use 8-bit data types, which have established themselves as the standard in machine learning applications, while internal operations are performed at varying degrees of precision. According to Arm, the throughput is 4 TOP/s for an implementation in TSMC's 7 nm process using int8 data, and 2 TOP/s for int16. Typical patterns with 16-bit activations, 8-bit weights and 16x16 operations reduce the throughput to 1 TOP/s.
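The kind of work a MAC-based convolution engine performs on 8-bit data can be illustrated with a minimal pure-Python sketch. It is not Arm's implementation: the function names, the `shift` requantization parameter and the saturation step are assumptions chosen for illustration, and the wide accumulator merely mimics what hardware would typically do with a 32-bit register.

```python
def saturate_int8(value):
    """Clamp an integer to the signed 8-bit range [-128, 127]."""
    return max(-128, min(127, value))


def conv2d_int8(feature_map, kernel, shift=0):
    """Valid (unpadded) 2D convolution as used in CNNs, on int8 inputs.

    Every product is added to a wide accumulator, which is then scaled
    down by `shift` bits and saturated back to int8. `shift` is a
    hypothetical requantization parameter, not a value from the article.
    Returns the output map and the number of MAC operations performed.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(feature_map) - kh + 1
    out_w = len(feature_map[0]) - kw + 1
    output, macs = [], 0
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0  # wide accumulator, never truncated to 8 bits
            for i in range(kh):
                for j in range(kw):
                    acc += feature_map[y + i][x + j] * kernel[i][j]
                    macs += 1
            row.append(saturate_int8(acc >> shift))
        output.append(row)
    return output, macs


fmap = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
edge_kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
result, mac_count = conv2d_int8(fmap, edge_kernel)
print(result, mac_count)
```

Even this tiny 4x4 input with a 3x3 kernel needs 36 multiply-accumulate steps, which is why dedicated hardware executes many of them per clock cycle.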

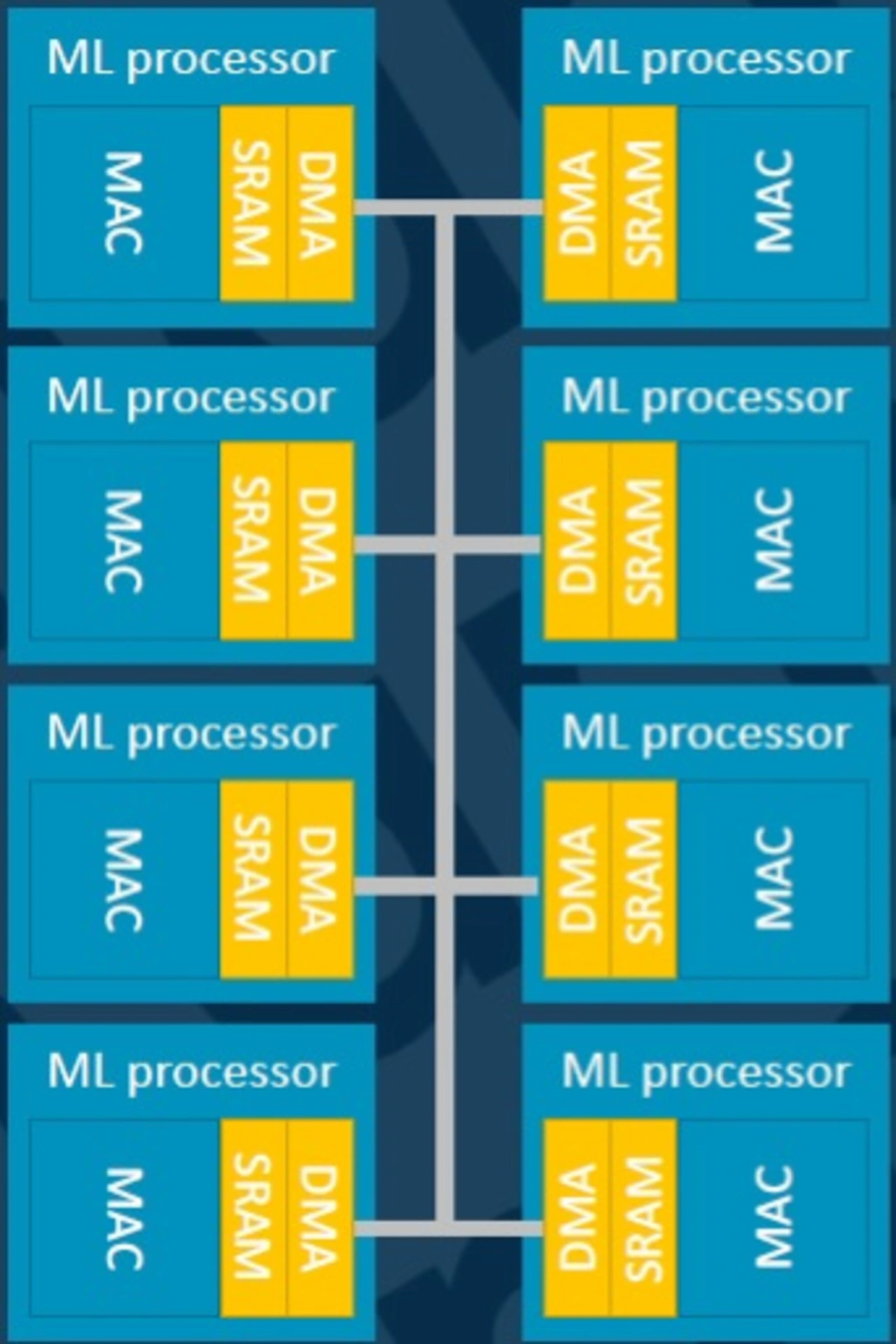

When the NPU was introduced in 2018, Arm assumed a power efficiency of 3 TOP/W; the IP has since been developed further to the point where customers are promised 5 TOP/W. The new IP is now also available as an option. The MAC engine utilization of up to 93 % is remarkable (Inception v3, a well-known image classification CNN, with Winograd techniques). Furthermore, up to 8 NPUs can be combined in a closely coupled multicore cluster to achieve a peak throughput of 32 TOP/s (Figure 4). The NPUs can be interconnected non-coherently, e.g. to run several inference types in parallel, in which case several address spaces are supported. It is also possible to run one neural network on all cores in coherent mode to maximize throughput, e.g. for the analysis of high-resolution 4K images.
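The figures quoted above can be combined in a small back-of-the-envelope calculation. The constants are the numbers Arm states; the derived power values assume that the TOP/W efficiency applies at peak int8 throughput, which the article does not confirm, so they should be read as rough estimates only. All names are illustrative.

```python
TOPS_PER_NPU_INT8 = 4.0       # TOP/s per NPU (Arm figure, 7 nm, int8)
MAX_NPUS_PER_CLUSTER = 8      # largest cluster mentioned in the article
EFFICIENCY_2018 = 3.0         # TOP/W announced in 2018
EFFICIENCY_UPDATED = 5.0      # TOP/W promised for the updated IP
PEAK_MAC_UTILIZATION = 0.93   # Inception v3 with Winograd techniques


def cluster_peak_tops(npus, tops_per_npu=TOPS_PER_NPU_INT8):
    """Peak throughput of a cluster, assuming ideal linear scaling."""
    if not 1 <= npus <= MAX_NPUS_PER_CLUSTER:
        raise ValueError("cluster size must be between 1 and 8 NPUs")
    return npus * tops_per_npu


def power_watts(tops, tops_per_watt):
    """Power needed to sustain `tops` at a given efficiency (assumption:
    the efficiency figure holds at that throughput)."""
    return tops / tops_per_watt


print(cluster_peak_tops(8))                                  # 8 x 4 TOP/s
print(power_watts(TOPS_PER_NPU_INT8, EFFICIENCY_2018))       # 2018 estimate
print(power_watts(TOPS_PER_NPU_INT8, EFFICIENCY_UPDATED))    # updated IP
print(TOPS_PER_NPU_INT8 * PEAK_MAC_UTILIZATION)              # effective TOP/s
```

Under these assumptions, a single NPU at peak throughput would move from roughly 1.3 W to 0.8 W, and 93 % utilization corresponds to about 3.7 of the nominal 4 TOP/s.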

The SRAM is a shared block of 1 MB that serves as a local buffer for the calculations of the compute engines. The function blocks of the compute engines handle different stages of the neural network model, for example convolution computation, weight decoding, etc. Convolution calculations are performed in a 128-bit wide MAC unit.

Each compute engine has its own local memory, which its modules use to process DNN (deep neural network) models. The sequence is typical for DNN implementations: it starts with weighting the incoming data, continues with processing in the MAC convolution engine, and ends with post-processing of the results by the programmable layer engine (PLE).

Development for future NN

The so-called Programmable Layer Engine (PLE) is intended to keep the architecture flexible with regard to the ongoing development of machine learning. Whether today's models will still be in use in years to come, once sufficient experience has been gained with them, is anything but certain. The PLE enables the ML processor to integrate new operators via vector- and NN-specific instructions, and it processes the results of the MAC engines before the data is written back to the SRAM.

Another challenge in edge computing is the limited data throughput to and from the chip and the available power budget; in particular, external DRAM can consume about as much power as the ML processor itself. Arm's architecture therefore uses common techniques to compress feature maps losslessly by exploiting the frequent occurrence of repeated zeros within a given 8×8 block. The SRAM, which stores intermediate results and data, also consumes a large amount of energy, so Arm takes every opportunity to reduce the SRAM capacity required. For 16 compute engines, Arm sees a requirement of 1 MB of SRAM.
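Arm has not published the exact compression format, but the idea of exploiting zeros in an 8×8 block can be sketched with a simple bitmask scheme: one bit per position marks whether the value is non-zero, and only the non-zero values are stored. The scheme and all function names below are assumptions for illustration, not Arm's actual method.

```python
BLOCK_VALUES = 64  # one flattened 8x8 block of int8 feature-map values


def compress_block(block):
    """Return (mask, nonzeros): a 64-bit occupancy mask plus the
    non-zero values in their original order."""
    if len(block) != BLOCK_VALUES:
        raise ValueError("expected a flattened 8x8 block (64 values)")
    mask, nonzeros = 0, []
    for index, value in enumerate(block):
        if value != 0:
            mask |= 1 << index
            nonzeros.append(value)
    return mask, nonzeros


def decompress_block(mask, nonzeros):
    """Rebuild the original 64 values exactly (lossless)."""
    values = iter(nonzeros)
    return [next(values) if (mask >> i) & 1 else 0
            for i in range(BLOCK_VALUES)]


def compressed_size_bytes(nonzeros):
    """8 bytes for the mask plus one byte per stored int8 value."""
    return 8 + len(nonzeros)


# A block after ReLU often contains many zeros; here 48 of 64 are zero.
block = [0] * BLOCK_VALUES
for position in range(0, BLOCK_VALUES, 4):
    block[position] = position + 1
mask, nonzeros = compress_block(block)
print(compressed_size_bytes(nonzeros), "bytes instead of", BLOCK_VALUES)
```

The scheme only pays off for sparse blocks: a block without any zeros would grow from 64 to 72 bytes, so a real design would need a fallback for dense data.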

To further reduce memory requirements, the weights are also compressed and pruned. Analogous to biological synapses in the brain, the weights represent the knowledge stored in a neural network. Removing weights and neurons that have no significant influence on the final result allows the hardware to skip certain calculations and thus reduces the number of DRAM accesses. Arm claims that compression ratios of up to 3x are possible.
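How pruning lets hardware skip work can be shown with a minimal sketch of magnitude-based pruning followed by a dot product that ignores zeroed weights. The threshold criterion and the function names are assumptions; the article does not say which pruning method Arm's tools apply.

```python
def prune_weights(weights, threshold):
    """Set every weight whose magnitude is below `threshold` to zero."""
    return [w if abs(w) >= threshold else 0 for w in weights]


def sparse_dot(activations, weights):
    """Dot product that skips zero weights.

    Returns the accumulated sum and the number of MAC operations
    that were actually executed.
    """
    acc, macs = 0, 0
    for a, w in zip(activations, weights):
        if w == 0:
            continue  # nothing to fetch or multiply for a pruned weight
        acc += a * w
        macs += 1
    return acc, macs


weights = [40, -1, 0, 2, -55, 1, 3, 90]
activations = [1, 2, 3, 4, 5, 6, 7, 8]
pruned = prune_weights(weights, threshold=4)
print(sparse_dot(activations, weights))  # original weights
print(sparse_dot(activations, pruned))   # pruned weights, fewer MACs
```

In this toy example the pruned result differs from the original one, which is why pruning in practice is limited to weights whose removal keeps the network's accuracy within acceptable bounds, often with retraining.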

To reduce the number of calculations, Winograd techniques can be used (fast convolution algorithms based on the minimal filtering work of the computer scientist Shmuel Winograd). According to Arm, they can reduce the area and energy consumption for networks such as Inception v1-v4, ResNet v1/v2, VGG16 and YOLOv2 by up to 50%.
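The principle behind Winograd convolution can be seen in its smallest one-dimensional case, usually written F(2,3): two outputs of a 3-tap filter are computed with 4 multiplications instead of 6. The sketch below uses the standard textbook formulation; it says nothing about how Arm's hardware implements the transform, and the function names are illustrative.

```python
from fractions import Fraction


def direct_f23(d, g):
    """Two outputs of a 3-tap filter, computed directly (6 multiplications)."""
    return [d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]]


def winograd_f23(d, g):
    """The same two outputs with only 4 multiplications.

    The filter transform (u1..u4) depends only on the weights and can be
    computed once in advance; Fractions keep the halving exact.
    """
    g0, g1, g2 = (Fraction(x) for x in g)
    u1 = g0
    u2 = (g0 + g1 + g2) / 2
    u3 = (g0 - g1 + g2) / 2
    u4 = g2
    # Input transform uses additions only; then 4 element-wise products.
    m1 = (d[0] - d[2]) * u1
    m2 = (d[1] + d[2]) * u2
    m3 = (d[2] - d[1]) * u3
    m4 = (d[1] - d[3]) * u4
    # Output transform, again additions only.
    return [m1 + m2 + m3, m2 - m3 - m4]


data = [1, 2, 3, 4]
taps = [1, 0, -1]
print(direct_f23(data, taps), winograd_f23(data, taps))
```

Nesting the same idea in two dimensions gives the 2×2 output tile for a 3×3 filter with 16 instead of 36 multiplications, at the cost of extra additions for the transforms.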

In addition to 7 nm, implementations in 16 nm are also planned. Besides the maximum configuration with 16 compute engines and 1 MB of SRAM, smaller variants will follow later, down to a minimum configuration with only one compute engine. In terms of silicon area, the NPU with 16 engines will occupy slightly more than a Cortex-A55. Compared to the brand-new Cortex-A77 application processor, the ML processor achieves 64 times the computing power, measured in MAC operations per core and clock cycle.

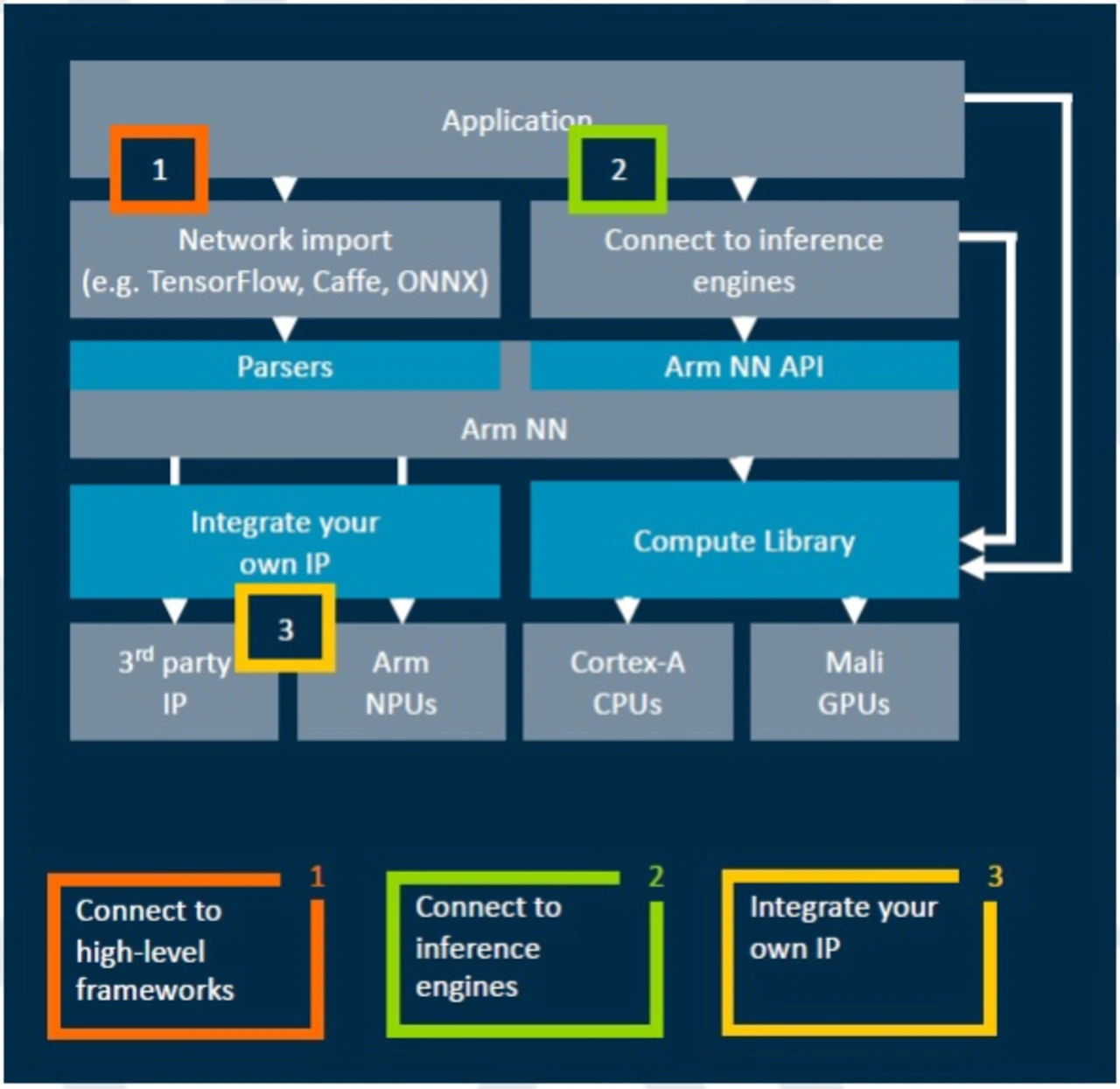

Arm wants to serve workloads for NPUs, CPUs and GPUs with a common software library. Its core is "Arm NN", a kind of bridge between the common third-party neural network frameworks such as TensorFlow, Caffe and Android NNAPI on one side and the various Arm CPUs and GPUs on the other (Fig. 5). Code can be written generically and executed on any available processing unit. For example, a budget-oriented device with a traditional CPU and GPU setup can execute the same NN code as a high-end device with a discrete ML processor, only more slowly. Arm also provides support for third-party IP that can be integrated into the stack as needed. To date, Arm NN comprises approximately 445,000 lines of code and represents 120 person-years of development effort. Arm estimates that it is already being used in over 250 million Android devices. Thanks to optimized implementations by Arm's partners, Arm NN is said to be significantly faster than other NN frameworks: on a Cortex-A processor, for example, Arm NN is 1.5x faster on ResNet-50 and even 3.2x faster on Inception v3.
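The "write once, run on whatever is available" idea can be sketched as a simple backend preference list with fallback. This is deliberately not the Arm NN API: the backend names, `pick_backend` and `describe_run` are hypothetical and only illustrate the dispatch concept described above.

```python
# Hypothetical backend names, ordered from fastest to slowest option.
PREFERENCE = ("npu", "gpu", "cpu")


def pick_backend(available, preference=PREFERENCE):
    """Return the most preferred backend that the device actually has."""
    for backend in preference:
        if backend in available:
            return backend
    raise RuntimeError("no supported backend available on this device")


def describe_run(model_name, available):
    """Illustrative stand-in for dispatching one model to one backend."""
    return f"{model_name} runs on {pick_backend(available)}"


# The same model description is used on both devices; only the
# selected processing unit differs.
print(describe_run("inception_v3", {"cpu", "gpu", "npu"}))  # high-end device
print(describe_run("inception_v3", {"cpu", "gpu"}))         # budget device
```

The application code stays identical across devices; the library layer decides where the work is executed, which is what makes the slower fallback on budget hardware transparent to the developer.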

In a way, Arm NN follows the same approach as Nvidia's CUDA platform, which targets a range of GPUs. These GPUs are similar, but each has small differences that CUDA hides from the developer. A proprietary framework, however, makes it difficult for developers to switch to other platforms, and CUDA covers more than just artificial intelligence (AI) and ML applications. Developers who target ML tools such as TensorFlow can usually move from platform to platform, including to Arm's ML processor.
